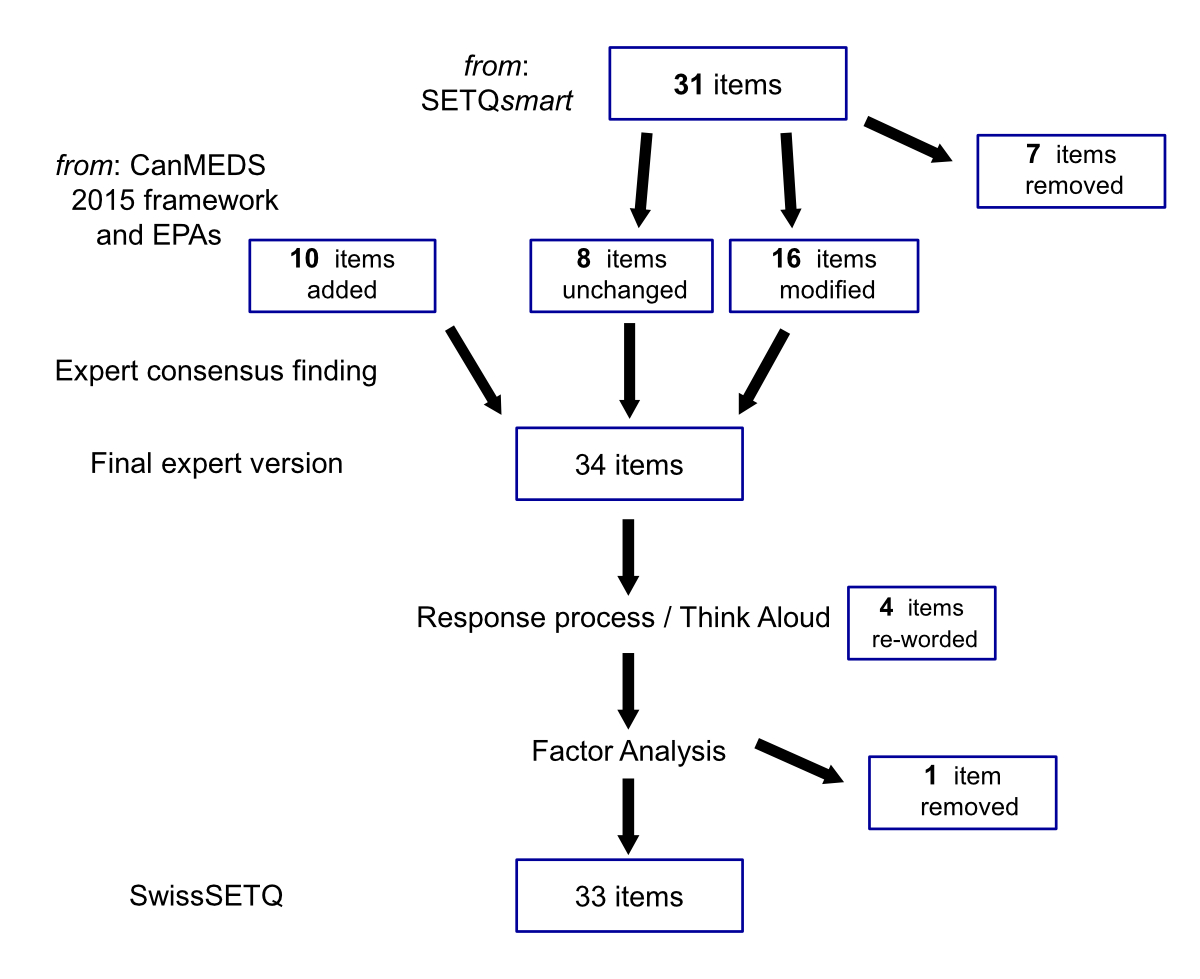

Figure 1 Flow-chart of item handling for the final SwissSETQ instrument.

DOI: https://doi.org/10.4414/SMW.2022.w30137

SETQsmart: System for Evaluation of Teaching Qualities ‘smart’

SwissSETQ: Swiss System for Evaluation of Teaching Qualities

CanMEDS: Canadian Medical Educational Directives for Specialists

EPAs: Entrustable Professional Activities

KMO: Kaiser-Meyer-Olkin test

SFDP-26: Stanford Faculty Development Program-26[item] instrument

SEM: Standard error of measurement

ANOVA: Analysis of Variance

The quality of teaching in graduate medical education is crucial in preparing trainees for independent practice and future healthcare challenges [1]. One fundamental strategy to improve teaching competencies is to give teachers specific, reliable and meaningful feedback [2, 3], ideally provided by the recipients of the teaching (bottom-up feedback). The ultimate goal of this feedback is to support the development of teachers in the sense of assessment for learning [4].

An easy and (mostly) hierarchy-free way of providing such feedback is by using anonymous online questionnaires [5, 6]. Several instruments for this have been developed in the past, yet most instruments either did not include all aspects of clinical teaching or lacked a formal validation [7]. However, one instrument, SETQsmart (System for Evaluation of Teaching Qualities) [8], has become well established and has been extensively validated and updated over the years [9–11], in particular in anaesthesiology training [8]. SETQsmart has the additional advantage that it describes specific and observable teaching behaviours. Providing explicit information to clinical supervisors (who are not typically experts in education) makes it more likely that users will perceive the tool as useful and credible [12, 13].

SETQsmart was an updated version of the original SETQ instrument [9], which itself was built on the validated tool SFDP-26 (Stanford Faculty Development Program) [14, 15]. SETQsmart additionally included the CanMEDS (Canadian Medical Educational Directives for Specialists) 2005 framework [16], the Accreditation Council for Graduate Medical Education principles [17], key propositions from the Lancet Report on the future training of the health care force [18], and the ‘Teaching as a competency’ framework [19]. It did not, however, incorporate two important recent developments, namely the principles of the CanMEDS 2015 update [http://canmeds.royalcollege.ca/en/framework] (which added the topics "Interprofessionalism", "Accountability for the continuity of care", "Patient safety", "Lifelong learning") and the concept of entrustment, conceptualised as entrustable professional activities (EPAs) [20]. EPAs help to delineate residents’ learning paths [21], which we found especially valuable to incorporate in a bottom-up feedback tool, given the discrepancies between trainee and supervisor views on first-year EPAs that have recently been described [22].

Thus, we designed an instrument accommodating these developments to the needs of contemporary graduate medical training in Switzerland. We thoroughly revised SETQsmart by integrating items from the CanMEDS 2015 and the EPA frameworks, while also re-wording items for better application in the Swiss context, as well as removing some items to prevent further inflation of the instrument. The aim of this paper is to introduce SwissSETQ, and to validate the new instrument in the field of anaesthesiology training.

The study was granted exemption by the Ethics Committee of the Canton of Zurich as the study type did not fall under the Swiss Human Research Act (BASEC-Nr. Req-2019-00874).

In this section we first describe the development of the instrument followed by the procedures used for validation. The development process of the instrument is shown in figure 1. The manuscript adheres to the Standards for QUality Improvement Reporting Excellence in Education (SQUIRE-EDU) guidelines [23] as part of the Enhancing the Quality of and Transparency of Health Research (EQUATOR) network for the reporting of studies [24].


To start from a solid factual basis, we used the well-established SETQsmart instrument [8]. SETQsmart encompasses 28 items across 7 domains of teaching quality: (1) creating a positive learning climate, (2) displaying a professional attitude toward residents, (3) evaluation of residents’ knowledge and skills, (4) feedback to residents, (5) learner centredness, (6) professionalism, and (7) role modelling. SETQsmart also provides one additional item for global performance and open questions on strengths and on suggestions for teacher improvement. For SETQsmart, high content validity and excellent psychometric properties had been demonstrated (with Cronbach’s alphas above 0.95 for the entire instrument and above 0.80 for the subscales) [8, 11].

After translating the SETQsmart questionnaire into German (EvG, APM), an interdisciplinary group of medical education researchers, clinical supervisors and programme directors (APM, MPZ, RS, RT, JBr, SH, RG) revised the content of the instrument. The process followed a non-formalised consensus technique including online collaboration, face-to-face discussions and two large group face-to-face meetings. The final version was approved by consensus of the whole group. To account for the residents’ developmental goals, outlined by the CanMEDS 2015 framework [25], we incorporated the concept of entrustable professional activities (EPAs) [26]. EPAs coherently delineate residents’ learning paths [21] and link these paths to supervisors’ entrustment decisions [27].

In addition to including CanMEDS 2015 and EPAs, a key goal in the revision was to strengthen the formative purpose of the instrument. Whereas the existing items of SETQsmart had mainly described teaching behaviour, we wanted to provide more concrete guidance for supervisors and therefore introduced items characterising the desired teaching content (e.g., "speak-up strategies", see item Prof_2, table 2). Items deemed unnecessary or redundant were removed or aggregated to avoid further inflating the original instrument. We agreed to tolerate a 10% increase in items. Finally, we changed the item wording into first-person questions in order to make the questionnaire more specific to the individual perspective of the trainees, ideally enhancing their engagement in the answers. The versions of the instrument were discussed in depth by the expert group after each of two rounds of iteration until final agreement.

In the next step, we presented the final expert version to future users by conducting two "think-aloud" rounds with residents in different years of training at the four centres (‘response process’ [28]). The aim was to ensure proper understanding of the items and the appropriateness of wording for the Swiss-German context. While the residents worked through the questionnaire, they were encouraged to say aloud whatever came to mind. Their comments were subsequently discussed together with suggestions for improvement. The feedback from the think-alouds was used to refine the final version for pilot testing.

The resulting instrument for pilot testing encompassed 34 items. Compared with the original SETQsmart questionnaire, this version included 8 unchanged items, 16 modified items and 10 new items, and 7 items were removed (see table 1; for details see supplemental files 1 and 2 in the appendix). New items addressed the topics/themes "Communication with patients and relatives", "Team communication", "Dealing with errors (one’s own and those of others)", "Interdisciplinary and interprofessional collaboration", "Ethics and future health system developments", and "Entrustment decisions".

Table 1 Overview of altered items within SwissSETQ, compared with SETQsmart.

| Domain | SETQsmart | Left unchanged¹ | Added | Modified | Removed | SwissSETQ |
| --- | --- | --- | --- | --- | --- | --- |
| Learning climate / supporting learning² | 6 | 2 | – | 3 | 1 | 5 |
| Professional (positive²) attitudes towards the learner | 4 | 2 | 1 | – | 2 | 3 |
| Learner centredness / supervision tailored to trainee’s needs² | 4 | – | 2 | 4 | – | 6 |
| Evaluation of residents’ (trainees’²) knowledge and skills | 4 | – | 3 | 3 | 1 | 6 |
| Feedback to residents/trainees² | 4 | 1 | – | 3 | – | 4 |
| Professional practice management | 3 | – | 4 | 3 | – | 7 |
| Role modelling | 3 | 3 | – | – | – | 3 |
| Overall rating | 1 | – | – | – | 1 | – |
| Open questions | 2 | – | – | – | 2 | – |
| Total | 31 | 8 | 10 | 16 | 7 | 34 |

¹ Item left unchanged, except for changing to first-person question

² Title of domain in the SwissSETQ instrument

We assessed (a) the internal structure (factorial composition) of the instrument by exploratory factor analysis, (b) the internal statistical consistency (using Cronbach’s alpha, omega total and greatest lower bound as measures of reliability), and (c) the inter-rater reliability, to determine the minimum number of ratings necessary for valid feedback to a single supervisor, using a generalisability study (G study) followed by a decision study (D study).

For assessing the internal structure, the instrument was tested between 1 January and 30 March 2020 in the anaesthesia departments of four major teaching hospitals in Switzerland (Bern University Hospital “Inselspital”, Cantonal Hospital of Lucerne, Cantonal Hospital of Winterthur, University Hospital Zurich). The instrument was distributed to all 220 trainees of the participating institutions at the time of starting the study. All 120 clinical supervisors (faculty) who had responsibility for trainees at these institutions could be provided with feedback. All trainees received an email invitation with an anonymous web-link to the online questionnaire. Participants were provided with information about the nature of the study prior to filling out the questionnaire. The trainees’ task was to rate the teaching quality of the clinical teachers they had worked with. Each item was rated on a seven-point Likert scale ("fully agree", "agree", "partly agree", "neutral", "partly disagree", "disagree", "fully disagree"). Participation was voluntary, and two reminders were sent over a period of four weeks. The ratings were collected via a web-based data collection platform (Survey Monkey, Palo Alto, CA, USA) and subsequently allocated to individual teachers. Teachers were de-identified by using a number code.

The data collected were protected by individual password-secured access and were accessible exclusively to the two principal investigators (APM, JBr). All information that could have identified individual supervisors was coded before data processing.

To confirm that a factor analysis was justified for our given data set, we performed a Kaiser-Meyer-Olkin (KMO) test. The test score can take values from 0 to 1 and should exceed 0.8 to be well acceptable [29]. After having confirmed suitability for factor analysis, we used Bartlett’s test to verify that variances were equal across the sample (assuming a p-value below 0.01 as statistically significant).

Exploratory factor analysis was performed with all 34 items measured on 185 occasions. The factor analysis used the Kaiser criterion (which suggests dropping all components with eigenvalues below 1.0, i.e., if less variance than one single variable is explained). Subsequently, we performed reliability analyses for the total factor score as well as for the items forming the single factors found.
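The Kaiser criterion described above can be illustrated with a minimal sketch. It assumes that factors are screened on the eigenvalues of the item correlation matrix; the function name `kaiser_retained` and the toy three-item correlation matrix are hypothetical and are not taken from the study data or from the SPSS procedure actually used.

```python
import numpy as np


def kaiser_retained(corr):
    """Return eigenvalues above 1.0 and the share of total variance each explains.

    `corr` is a square item correlation matrix (hypothetical input).
    """
    corr = np.asarray(corr, dtype=float)
    # eigvalsh returns eigenvalues of a symmetric matrix in ascending order
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]
    # Kaiser criterion: keep only components explaining more than one variable
    retained = eigenvalues[eigenvalues > 1.0]
    # The trace of a correlation matrix equals the number of items,
    # so eigenvalue / number of items is the proportion of variance explained
    explained = retained / corr.shape[0]
    return retained, explained


# Toy example (not study data): three items, all pairwise correlations 0.5
toy_corr = [[1.0, 0.5, 0.5],
            [0.5, 1.0, 0.5],
            [0.5, 0.5, 1.0]]
retained, explained = kaiser_retained(toy_corr)
print(len(retained), explained)
```

In this toy case only one component exceeds the threshold. The sketch covers the unrotated retention step only; rotation of the retained components, as reported in table 2, is a separate step.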

To assess the internal consistency of the instrument and its factors we calculated Cronbach’s alpha (with values of >0.7 regarded as acceptable, >0.8 as good, and >0.9 as excellent). However, Cronbach's alpha tends to underestimate the degree of internal consistency owing to the potentially skewed distribution of the answers in the individual items [30]. Thus, we also report two alternative measures, "omega total" and "greatest lower bound" to the reliability of the test (GLB) [30]. The values derived from the two tests are interpreted in a similar fashion to Cronbach’s alpha. As a further point, we compared the total scores of the instrument between the four institutions for potential differences by means of a one-way ANOVA (analysis of variance).
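For readers less familiar with Cronbach’s alpha, the following sketch shows the standard formula together with the interpretation thresholds stated above. The ratings are invented for illustration (five hypothetical questionnaires, three items on the seven-point scale), and the helper names `cronbach_alpha` and `interpret_alpha` are assumptions, not part of the analysis software used in the study.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of rows (one row per questionnaire, one column per item)."""
    n_items = len(ratings[0])
    if n_items < 2 or len(ratings) < 2:
        raise ValueError("need at least two items and two questionnaires")
    # Sample variance of each item across questionnaires
    item_variances = [variance([row[j] for row in ratings]) for j in range(n_items)]
    # Sample variance of the total score per questionnaire
    total_variance = variance([sum(row) for row in ratings])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


def interpret_alpha(alpha):
    """Labels follow the thresholds given in the text; the lowest label is our own wording."""
    if alpha > 0.9:
        return "excellent"
    if alpha > 0.8:
        return "good"
    if alpha > 0.7:
        return "acceptable"
    return "below acceptable"


# Hypothetical ratings, not study data
example = [[7, 6, 7], [5, 5, 6], [6, 6, 6], [3, 4, 3], [4, 4, 5]]
alpha = cronbach_alpha(example)
print(round(alpha, 3), interpret_alpha(alpha))
```

Omega total and the greatest lower bound require a factor model or an optimisation step and are not covered by this sketch.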

To investigate the inter-rater reliability of the instrument, generalisability theory was used. In generalisability theory two different types of studies are commonly distinguished: G studies and D studies. In a G study the amount of variance associated with the different facets (factors) being examined is quantified according to the data at hand. Based on the data of the G study, a consecutive D study yields information about how to alter the protocol in order to achieve optimal reliability (G coefficient). Here, a G study was performed and the G coefficient was calculated. Based on the result of the G study, the subsequent D study was used to estimate the minimum number of ratings necessary to provide reliable feedback to a single supervisor [31]. A G coefficient above 0.75 was considered sufficient and above 0.8 desirable. The analyses were performed at the question level for supervisors who had received three or more evaluations. For the G study, the total variance of the total score was decomposed into components associated with supervisors (s) and trainees (t) nested (:) within supervisors (s), and crossed (×) with the items (i); supervisors served as the object of measurement and items were set as a fixed facet. This (t:s) × i design allows the variance components of two sources to be estimated: (a) the differences between supervisors (object of measurement) and (b) the differences between trainees nested within the judgements on supervisors [32, 33]. In a D study, the reliability indices (G coefficient) and standard error of measurement (SEM) are reported as a function of the number of trainee ratings per supervisor.
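The logic of the D study can be sketched as follows. The sketch assumes the usual relation for this design, in which the G coefficient equals the supervisor variance divided by the sum of the supervisor variance and the rater-within-supervisor variance scaled by the number of ratings. The supervisor variance is the value reported in table 4; the single-rating rater variance is back-calculated from the G-study row of table 4 (0.137 × 3.93) and is therefore an approximation, as are the function names. The SEM is not reproduced here.

```python
# Variance components taken or derived from table 4 (rounded, hence approximate)
VAR_SUPERVISOR = 0.402            # inter-supervisor variance
VAR_RATER_SINGLE = 0.137 * 3.93   # assumption: rater variance for one rating, back-calculated


def g_coefficient(n_ratings, var_s=VAR_SUPERVISOR, var_t=VAR_RATER_SINGLE):
    """G coefficient when each supervisor is rated by n_ratings trainees."""
    return var_s / (var_s + var_t / n_ratings)


def min_ratings(threshold, max_n=50):
    """Smallest number of ratings whose G coefficient reaches the threshold, or None."""
    for n in range(1, max_n + 1):
        if g_coefficient(n) >= threshold:
            return n
    return None


for n in range(2, 8):
    print(n, round(g_coefficient(n), 3))
print(min_ratings(0.8))
```

Because the inputs are rounded, the coefficients agree with table 4 only to within a few thousandths, so thresholds that fall exactly on a tabulated value (such as 0.75 at four ratings) should be read from the table rather than from this sketch.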

Statistical analyses were performed with SPSS for Windows version 26 (IBM, Armonk, NY, USA) and the statistical computing language R [34]. Variance components for the generalisability analysis were calculated using G_String, a Windows wrapper for urGENOVA [35].

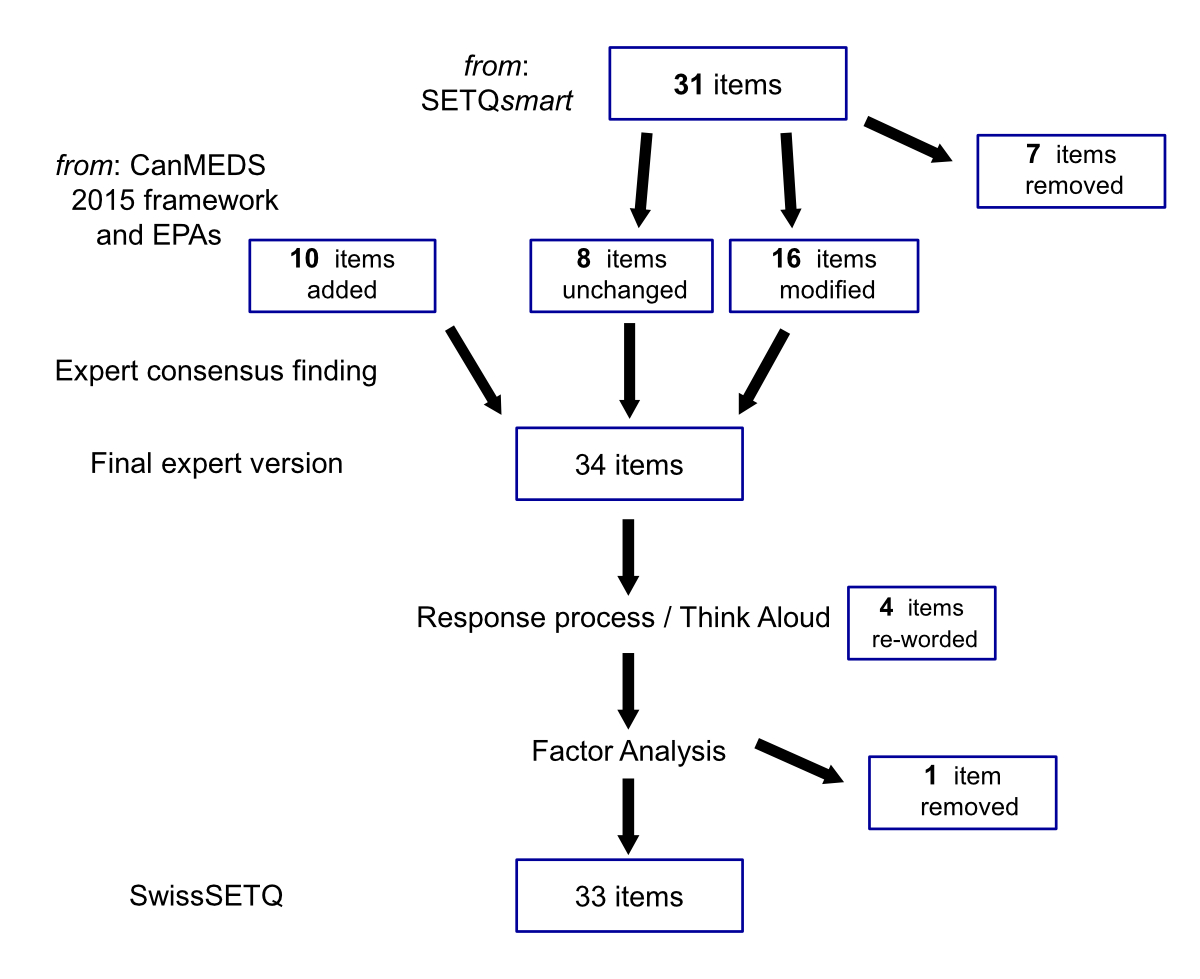

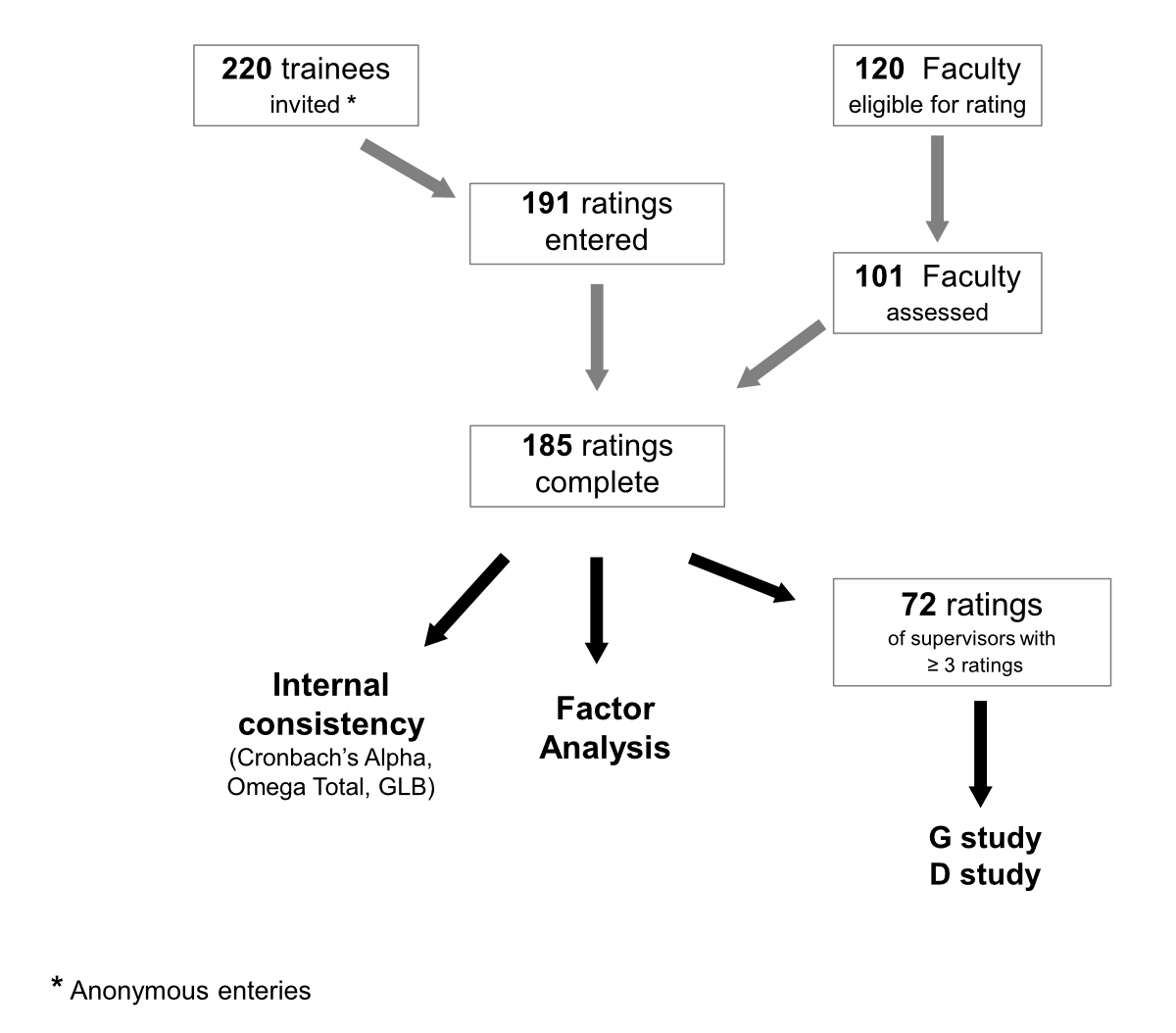

We present the results on the validity of the instrument based on three sources (according to Cook and Beckman) [28]: content validity, response process and internal structure (including exploratory factor analysis, internal consistency, and generalisability analysis). We present an overview of the flow of numbers of ratings in figure 2.

Figure 2 Flow-chart of study design (participants, ratings, and analyses performed).

Using an extensively validated instrument [8] as a starting point we ensured basic content validity. Adding content from the well-founded CanMEDS framework [25, 36–38] further enhanced validity, even more as it has been shown to be easily understood by clinical teachers without background in medical education [39]. Finally, use of the EPA framework [26] strengthens content validity, as it represents the natural developmental paths towards independent clinical practice [40].

Think-alouds with residents led to the rewording of four items (out of 34) and provided the basis for removing one item (LK_5) from the final instrument (the removal of this item was further supported by its low communality in the factor analysis). The number of incomplete ratings in the pilot study was 8 out of 193 (4.1%), reflecting appropriate user friendliness.

Overall, 185 fully completed ratings for 101 clinical teachers were included in the statistical analysis. The number of ratings per supervisor ranged from 1 to 9 (average 2); 16 supervisors received 3 or more ratings. For this data set, the suitability for factor analysis was confirmed by the Kaiser-Meyer-Olkin (KMO) test (KMO = 0.944) and Bartlett’s test (p <0.001). The exploratory factor analysis identified four factors that explained 72.3% of the total variance: "Individual instruction" (33.8%), "Evaluation of trainee performance" (20.9%), "Teaching professionalism" (12.8%), and "Entrustment decisions" (4.7%). We found double factor loading of eight items (table 2: items LK_4, LK_5, LF_2, Eval_6, FB_1, Prof_1, Prof_2, Prof_5), and a communality below 0.6 for four items (items LK_4, LK_5, LF_5, Eval_6). Consequently, we re-worded one item (LK_4), and removed a second (LK_5). We accepted double factor loading for the remaining seven items, as we found them important in providing formative feedback to supervisors. Factor loadings on the final orthogonally rotated component matrix are shown in table 2.

Table 2 Items of the SwissSETQ instrument with communalities and factor loadings of the rotated component matrix.

| Communalities | Rotated component matrix factors | ||||

| 1 * | 2 * | 3 * | 4 * | ||

| Supporting learning | |||||

| LK_1 encourages me to actively participate in discussions | 0.765 | 0.812 | |||

| LK_2 encourages me to bring up unclear points / problems | 0.844 | 0.841 | |||

| LK_3 motivates me for further learning | 0.679 | 0.672 | 0.362 | ||

| LK_4 motivates me to keep up with the current literature | 0.576 | 0.346 | 0.414 | 0.532 | |

| LK_5 prepares him-/herself well for teaching presentations and talks ** | 0.456 | 0.425 | 0.500 | ||

| Positive attitude towards trainees | |||||

| PH_1 actively listens to me | 0.850 | 0.857 | |||

| PH_2 behaves respectfully towards me | 0.831 | 0.898 | |||

| PH_3 demands reasonable efforts from me (to a realistic extent) | 0.761 | 0.811 | |||

| Supervision tailored to trainee’s needs | |||||

| LF_1 sets clear learning goals for my learning activities | 0.755 | 0.438 | 0.688 | ||

| LF_2 adjusts the learning goals to my (learning) needs | 0.692 | 0.516 | 0.614 | ||

| LF_3 gives too much responsibility to me (in relation to my abilities) | 0.843 | 0.918 | |||

| LF_4 gives too little responsibility to me (in relation to my abilities) | 0.762 | -0.370 | 0.778 | ||

| LF_5 cares for adequate supervision | 0.561 | 0.629 | 0.315 | ||

| LF_6 teaches an appropriate balance between self-care and the needs of patients (e.g., adequate work breaks, or providing emergency care just before end of shift) | 0.656 | 0.745 | |||

| Evaluation of trainees’ knowledge and skills (including communication) | |||||

| Eval_1 regularly evaluates my content knowledge | 0.795 | 0.810 | 0.313 | ||

| Eval_2 regularly evaluates my analytical competencies | 0.757 | 0.792 | 0.323 | ||

| Eval_3 regularly evaluates my practical skills | 0.624 | 0.360 | 0.635 | ||

| Eval_4 regularly evaluates my communication skills with patients/family members | 0.635 | 0.740 | |||

| Eval_5 regularly evaluates my communication skills within the team (interprofessional/interdisciplinary) | 0.689 | 0.780 | |||

| Eval_6 regularly performs high quality workplace-based assessments with me (e.g., Mini-CEX, DOPS, etc.) | 0.546 | 0.425 | 0.594 | ||

| Feedback for trainees | |||||

| FB_1 provides regular feedback | 0.657 | 0.523 | 0.557 | ||

| FB_2 provides constructive feedback | 0.789 | 0.784 | 0.377 | ||

| FB_3 explains and substantiates his/her feedback for me | 0.701 | 0.694 | 0.429 | ||

| FB_4 determines the next steps for learning, together with me | 0.776 | 0.453 | 0.739 | ||

| Professional practice management | |||||

| Prof_1 teaches me how to deal with self-committed mistakes | 0.694 | 0.580 | 0.434 | 0.410 | |

| Prof_2 teaches me how to improve the culture of dealing with errors (e.g., «Speak-Up»-techniques) | 0.680 | 0.573 | 0.388 | 0.448 | |

| Prof_3 teaches the principles of interprofessional/interdisciplinary collaboration to me | 0.794 | 0.690 | 0.498 | ||

| Prof_4 raises my awareness of the ethical aspects of patient care | 0.706 | 0.391 | 0.698 | ||

| Prof_5 teaches me the organizational aspects of patient care | 0.713 | 0.478 | 0.655 | ||

| Prof_6 raises my awareness of the economic aspects of patient care (e.g., "choosing wisely") | 0.725 | 0.337 | 0.763 | ||

| Prof_7 raises my awareness of future challenges of the health care system | 0.726 | 0.317 | 0.761 | ||

| Role modelling | |||||

| Vorb_1 is a role model for me as a supervisor / teacher | 0.901 | 0.856 | 0.315 | ||

| Vorb_2 is a role model to me as a physician | 0.862 | 0.818 | 0.301 | 0.302 | |

| Vorb_3 is a role model to me as a person | 0.774 | 0.812 | |||

Factor loadings of items incorporated in a factor (and used for measurement of internal consistency) are highlighted in bold with grey background (bold italics, if factor loading was below 0.6); for factor denomination and legend of item codes, see below.

Legend of item codes: LK_1 to LK_5: Lernklima (learning climate); PH_1 to PH_3: Professionelle Haltung gegenüber Weiterbildungsassistent/in (professional attitude towards trainees); LF_1 to LF_6: Lernförderliche Haltung (learner centredness); Eval_1 to Eval_6: Evaluation der Leistung (evaluation of trainees); FB_1 to FB_4: Feedback (feedback); Prof_1 to Prof_7: Professionalität (teaching professionalism); Vorb_1 to Vorb_3: Vorbildfunktion (role modelling)

* Factor 1: Individual instruction; factor 2: Evaluation of trainee’s performance; factor 3: Teaching professionalism; factor 4: Entrustment decisions

** Item not included in the final instrument (removed, due to double factor loading, low communality, and feedback from the "response process" [think alouds])

Comparing the total scores between the four institutions did not reveal any statistically significant differences (one-way ANOVA: F(3, 181) = 0.706; p = 0.550).

Internal consistency was calculated on the basis of the remaining 33 items (after factor analysis). Cronbach’s alpha for the total scale was 0.964 (95% confidence interval [CI] 0.956–0.971), and omega total was 0.981 (95% CI 0.977–0.985), while the greatest lower bound was 0.983 (no 95% CI). Subscales ranged from 0.718 to 0.974 for Cronbach’s alpha and 0.718 to 0.982 for omega total. Further details are summarised in table 3 for both runs.

Table 3 Measurements of internal consistency for total and factor scores.

| Scale | No. of items | Statistic | Value | 95% CI lower bound | 95% CI upper bound |
| --- | --- | --- | --- | --- | --- |
| Total | 33 | Cronbach's alpha | 0.964 | 0.956 | 0.971 |
| | | Omega total | 0.981 | 0.977 | 0.985 |
| | | GLB * | 0.983 | – | – |
| Factor 1 ** | 16 | Cronbach's alpha | 0.974 | 0.968 | 0.979 |
| | | Omega total | 0.982 | 0.978 | 0.986 |
| | | GLB * | 0.977 | – | – |
| Factor 2 ** | 10 | Cronbach's alpha | 0.938 | 0.924 | 0.951 |
| | | Omega total | 0.957 | 0.947 | 0.966 |
| | | GLB * | 0.963 | – | – |
| Factor 3 ** | 5 | Cronbach's alpha | 0.883 | 0.854 | 0.908 |
| | | Omega total | 0.908 | 0.885 | 0.927 |
| | | GLB * | 0.911 | – | – |
| Factor 4 ** | 2 | Cronbach's alpha | 0.718 | 0.623 | 0.789 |

GLB: greatest lower bound

* The 95% confidence interval can only be displayed for Cronbach’s alpha and omega total.

** Factor 1: Individual instruction; factor 2: Evaluation of trainee’s performance; factor 3: Teaching professionalism; factor 4: Entrustment decisions

To analyse inter-rater reliability, a total of 72 ratings entered the analysis for the 16 supervisors who had received three or more ratings. Trainee ratings (t) were nested within supervisors (s) and crossed with the 33 items remaining after factor analysis (i). The G study revealed an inter-rater reliability of 0.746 with a mean of 3.93 ratings per supervisor. In the D study that followed, the inter-rater reliability coefficients were estimated as a function of the number of ratings per clinical teacher. Table 4 shows the results of the G study and the D study. The D study revealed that three ratings were enough to reach a generalisability coefficient of roughly 0.7 and that a minimum of five to six individual ratings was necessary to reliably assess one clinical teacher.

Table 4 G study and D study on inter-rater reliability.

| Study | n | Inter-supervisor variance | Rater variance within supervisor | G coefficient | SEM |
| --- | --- | --- | --- | --- | --- |
| G study | 3.93 | 0.402 | 0.137 | 0.746 | 0.482 |
| D study | 2 | 0.402 | 0.269 | 0.600 | 0.605 |
| | 3 | 0.402 | 0.179 | 0.692 | 0.531 |
| | 4 | 0.402 | 0.134 | 0.750 | 0.478 |
| | 5 | 0.402 | 0.107 | 0.789 | 0.439 |
| | 6 | 0.402 | 0.090 | 0.818 | 0.408 |
| | 7 | 0.402 | 0.077 | 0.840 | 0.383 |

SEM: standard error of measurement

In this paper, we present the new SwissSETQ instrument for providing bottom-up feedback to clinical teachers. The instrument integrates recent developments in graduate medical education into a well-established existing tool, and also strengthens the formative purpose of the tool. We found very good to excellent properties for all three sources of validity (according to Cook and Beckman) [28]: internal structure (including factorial composition, internal consistency, and inter-rater reliability), content validity, and response process.

The KMO test and Bartlett’s test revealed that exploratory factor analysis was well justified with a sample size of n = 185. The factor analysis identified four factors that explained more than 70% of the total variance (Individual instruction; Evaluation of trainees; Teaching professionalism; Entrustment decisions). This stands in contrast to the six factors of SETQsmart. Our factor analysis showed that the newly introduced themes clearly changed the initial structure of SETQsmart, underlining the importance of statistically validating the new instrument. The difference in factors may be explained by the overlap of factor loading between the domains; in particular, factor 1 (Individual instruction) is related to the sections Supporting learning, Positive attitude towards the learner, and Supervision tailored to trainee’s needs, as well as to Role modelling. Although the factor analysis identified these four factors, we kept the seven thematic sections of the original SETQsmart instrument to give the questionnaire a more organised structure.

Based on double factor loading and low communalities, we re-worded one item and removed another one. However, we accepted double factor loading for seven items because we found them important in providing formative feedback, and thereby in shaping teaching behaviour. In keeping these items, we prioritised the formative developmental aspect of the instrument even though some aspects of teaching quality may thus be statistically overrepresented.

One remarkable finding was that we could not find significant differences in the total scores of the instrument between the four institutions. Given the rather low sample sizes from the individual institutions, this consistency further reflects the excellent statistical properties of the instrument.

All analyses of internal consistency showed excellent results. As expected, omega total and the greatest lower bound both revealed higher values than Cronbach’s alpha [30]. Internal consistency was further demonstrated by the fact that each subscale of the four factors from the factor analysis showed the same effect. The only low value we found was for the subscale Entrustment decisions. This factor, however, was composed of only two items and is therefore unlikely to show high values.

For inter-rater reliability, G study and D study analysis of 72 ratings for 16 supervisors revealed acceptable inter-rater reliability coefficients with a minimum of five to six individual ratings to reliably assess one supervisor. This favourable inter-rater reliability is not too surprising given that the underlying factors are well measured. This finding is also in line with results for similar instruments measuring teaching quality [8, 15]. In the eyes of clinical teachers, this will enhance the credibility of SwissSETQ as a reliable feedback tool. Because users seek credibility, we find it important to prove such sound statistical properties for instruments such as the SwissSETQ, even if it has been pointed out that such instruments should not be mistaken for summative assessments of teaching quality [40].

The high content validity is underpinned by the foundation of the SwissSETQ in a well-validated preceding instrument [8] and by the widely applied and easily understood frameworks, CanMEDS 2015 [25, 39] and EPAs [26]. The two latter frameworks closely link the instrument to clinical practice and to the developmental goals of residents. Introduction of fundamental clinical concepts such as patient safety or interprofessionalism provides supervisors with explicit strategies to align their teaching with the needs of future health care. This connection is paramount for making the instrument useful to both the residents applying it and the supervisors receiving the feedback [22].

Think-alouds with residents supported the high quality of the tool, even before items were reworded. Still, more than 10% of the items were further improved by this process, thus underlining the value of refining such questionnaires through the input of future users. A further indication of a consistent response process was the very low portion of incomplete ratings in the pilot study, suggesting the questionnaires may have been completed with high engagement. However, to explore this question in depth a qualitative approach would be necessary.

As the first and major limitation, the validation measures are explanatory only and do not compare the properties of the instrument with an established standard. A claim for advancement of this feedback instrument can only be based on the underpinning constructs of CanMEDS 2015 and EPAs.

Second, only one medical specialty was involved in this pilot. Application to other medical specialties remains to be established. Studies in this respect appear feasible since all items of SwissSETQ were formulated so as to be applicable in all clinical specialties. A third limitation may be seen in respect to the levels of teaching expertise of supervisors, which we were unable to assess. Values of the scales might be skewed according to variations in expertise. However, with 101 out of 120 supervisors we reached a high inclusion rate and therefore the distribution of expertise levels might not be too far away from real life. Similar to the supervisors, we have no data on the trainees’ years of training (it has been shown that views on educational progress may vary by years of training [42]). However, the perhaps more prominent confounder is a selection bias due to voluntary participation. The current scale might be shifted towards more positive values, as trainees who participated may have chosen to rate higher-valued or favourite supervisors. Confirming the instrument in a setting without a self-selection bias is crucial.

With the SwissSETQ instrument we provide a reliable bottom-up feedback instrument with sufficient credibility to supervisors. A reasonably low number of five to six ratings is sufficient for reliable ratings. Integrating recent concepts of graduate medical education (patient safety, patient centredness, interprofessionalism and entrustment decisions) aligns the instrument with the desired standards of health care in Switzerland. Finally, we strengthened the formative feedback component of that tool by explicitly describing concrete teaching content (such as "speak-up" techniques, or "dealing with errors"). Our study provides the necessary foundation to support application of this tool on a larger scale. The effects on teaching quality remain to be investigated.

The original, anonymised data can be provided by the authors upon reasonable request.

None (academic study)

All authors have completed and submitted the International Committee of Medical Journal Editors form for disclosure of potential conflicts of interest. No potential conflict of interest was disclosed.

We want to thank Prof. Elisabeth van Gessel (EvG), University Hospital Geneva, for her support in revising the content and wording of the SwissSETQ pilot version, as well as for providing a first translation of the SETQsmart instrument.

Individual contributions: JBr, APM, MPZ and SH were involved in the study design; DS was responsible for the statistical analysis in dialogue with JBr; APM, MPZ, RS, RT, JBr, SH, and RG contributed to the consensus finding process for the content of the instrument; APM, JBr, NS, RT, and JBe conducted the Think-Aloud sessions with trainees; JBr and APM prepared the first draft of the manuscript; all authors revised the manuscript and approved its final version.

1. Davis DA. Reengineering Medical Education. In: Gigerenzer G, Gray JA (eds). Better doctors, better patients, better decisions: Envisioning health care 2020. Boston, MA 2011 (The MIT Press), pp. 243–64.

2. Van Der Leeuw RM, Boerebach BC, Lombarts KM, Heineman MJ, Arah OA. Clinical teaching performance improvement of faculty in residency training: A prospective cohort study. Med Teach. 2016 May;38(5):464–70. https://doi.org/10.3109/0142159X.2015.1060302

3. Steinert Y, Mann K, Anderson B, Barnett BM, Centeno A, Naismith L, et al. A systematic review of faculty development initiatives designed to enhance teaching effectiveness: A 10-year update: BEME Guide No. 40. Med Teach. 2016 Aug;38(8):769–86. https://doi.org/10.1080/0142159X.2016.1181851

4. Schuwirth LW, Van der Vleuten CP. Programmatic assessment: from assessment of learning to assessment for learning. Med Teach. 2011;33(6):478–85. https://doi.org/10.3109/0142159X.2011.565828

5. Kember D, Leung DY, Kwan K. Does the use of student feedback questionnaires improve the overall quality of teaching? Assess Eval High Educ. 2002;27(5):411–25. https://doi.org/10.1080/0260293022000009294

6. Richardson JT. Instruments for obtaining student feedback: A review of the literature. Assess Eval High Educ. 2005;30(4):387–415. https://doi.org/10.1080/02602930500099193

7. Fluit CR, Bolhuis S, Grol R, Laan R, Wensing M. Assessing the quality of clinical teachers: a systematic review of content and quality of questionnaires for assessing clinical teachers. J Gen Intern Med. 2010 Dec;25(12):1337–45. https://doi.org/10.1007/s11606-010-1458-y

8. Lombarts KM, Ferguson A, Hollmann MW, Malling B, Arah OA; SMART Collaborators. Redesign of the System for Evaluation of Teaching Qualities in Anesthesiology Residency Training (SETQ Smart). Anesthesiology. 2016 Nov;125(5):1056–65. https://doi.org/10.1097/ALN.0000000000001341

9. Lombarts MJ, Bucx MJ, Rupp I, Keijzers PJ, Kokke SI, Schlack W. [An instrument for the assessment of the training qualities of clinician-educators]. Ned Tijdschr Geneeskd. 2007 Sep;151(36):2004–8.

10. van der Leeuw R, Lombarts K, Heineman MJ, Arah O. Systematic evaluation of the teaching qualities of Obstetrics and Gynecology faculty: reliability and validity of the SETQ tools. PLoS One. 2011 May;6(5):e19142. https://doi.org/10.1371/journal.pone.0019142

11. Boerebach BC, Lombarts KM, Arah OA. Confirmatory Factor Analysis of the System for Evaluation of Teaching Qualities (SETQ) in Graduate Medical Training. Eval Health Prof. 2016 Mar;39(1):21–32. https://doi.org/10.1177/0163278714552520

12. Bowling A. Quantitative social science: the survey. In: Bowling A, Ebrahim S (eds). Handbook of Health Research Methods: Investigation, Measurement & Analysis. New York 2005 (McGraw-Hill), pp. 190–214.

13. Lietz P. Research into Questionnaire Design: A Summary of the Literature. Int J Mark Res. 2010;52(2):249–72. https://doi.org/10.2501/S147078530920120X

14. Skeff KM. Evaluation of a method for improving the teaching performance of attending physicians. Am J Med. 1983 Sep;75(3):465–70. https://doi.org/10.1016/0002-9343(83)90351-0

15. Litzelman DK, Westmoreland GR, Skeff KM, Stratos GA. Factorial validation of an educational framework using residents’ evaluations of clinician-educators. Acad Med. 1999 Oct;74(10 Suppl):S25–7. https://doi.org/10.1097/00001888-199910000-00030

16. Frank JR. The CanMEDS 2005 Physician Competency Framework. Better Standards. Better Physicians. Better Care. Ottawa 2005 (The Royal College of Physicians and Surgeons of Canada).

17. Swing SR. The ACGME outcome project: retrospective and prospective. Med Teach. 2007 Sep;29(7):648–54. https://doi.org/10.1080/01421590701392903

18. Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, et al. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. 2010 Dec;376(9756):1923–58. https://doi.org/10.1016/S0140-6736(10)61854-5

19. Srinivasan M, Li ST, Meyers FJ, Pratt DD, Collins JB, Braddock C, et al. “Teaching as a Competency”: competencies for medical educators. Acad Med. 2011 Oct;86(10):1211–20. https://doi.org/10.1097/ACM.0b013e31822c5b9a

20. ten Cate O. Entrustability of professional activities and competency-based training. Med Educ. 2005 Dec;39(12):1176–7. https://doi.org/10.1111/j.1365-2929.2005.02341.x

21. Jonker G, Hoff RG, Ten Cate OT. A case for competency-based anaesthesiology training with entrustable professional activities: an agenda for development and research. Eur J Anaesthesiol. 2015 Feb;32(2):71–6. https://doi.org/10.1097/EJA.0000000000000109

22. Marty AP, Schmelzer S, Thomasin RA, Braun J, Zalunardo MP, Spahn DR, et al. Agreement between trainees and supervisors on first-year entrustable professional activities for anaesthesia training. Br J Anaesth. 2020 Jul;125(1):98–103. https://doi.org/10.1016/j.bja.2020.04.009

23. Ogrinc G, Armstrong GE, Dolansky MA, Singh MK, Davies L. SQUIRE-EDU (Standards for QUality Improvement Reporting Excellence in Education): Publication Guidelines for Educational Improvement. Acad Med. 2019 Oct;94(10):1461–70. https://doi.org/10.1097/ACM.0000000000002750

24. EQUATOR network. Accessed August 16, 2021. https://www.equator-network.org/

25. Frank JR, Snell L, Sherbino J, editors. CanMEDS 2015 Physician Competency Framework. Ottawa 2015 (Royal College of Physicians and Surgeons of Canada).

26. Ten Cate O, Chen HC, Hoff RG, Peters H, Bok H, van der Schaaf M. Curriculum development for the workplace using Entrustable Professional Activities (EPAs): AMEE Guide No. 99. Med Teach. 2015;37(11):983–1002. https://doi.org/10.3109/0142159X.2015.1060308

27. Breckwoldt J, Beckers SK, Breuer G, Marty A. [Entrustable professional activities: promising concept in postgraduate medical education] [German]. Anaesthesist. 2018 Jun;67(6):452–7. https://doi.org/10.1007/s00101-018-0420-y

28. Cook DA, Beckman TJ. Current concepts in validity and reliability for psychometric instruments: theory and application. Am J Med. 2006 Feb;119(2):166.e7–16. https://doi.org/10.1016/j.amjmed.2005.10.036

29. Kaiser HF, Rice J. Little Jiffy, Mark IV. Educ Psychol Meas. 1974;34(1):111–7. https://doi.org/10.1177/001316447403400115

30. Revelle W, Zinbarg RE. Coefficients alpha, beta, omega and the glb: comments on Sijtsma. Psychometrika. 2009;74(1):145–54. https://doi.org/10.1007/s11336-008-9102-z

31. Shavelson RJ, Webb NM, Rowley GL. Generalizability theory. Am Psychol. 1989;44(6):922–32. https://doi.org/10.1037/0003-066X.44.6.922

32. Shavelson RJ, Webb NM. Generalizability theory: A primer. Thousand Oaks, CA 1991 (Sage).

33. Brennan RL. Generalizability theory. J Educ Meas. 2003;40(1):105–7. https://doi.org/10.1111/j.1745-3984.2003.tb01098.x

34. R Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria 2018. URL https://www.R-project.org/

35. G_String [2019]. A Windows Wrapper for urGENOVA [cited 2019 12 September]. Available from: http://fhsperd.mcmaster.ca/g_string/index.html

36. Neufeld VR, Maudsley RF, Pickering RJ, Turnbull JM, Weston WW, Brown MG, et al. Educating future physicians for Ontario. Acad Med. 1998 Nov;73(11):1133–48. https://doi.org/10.1097/00001888-199811000-00010

37. CanMEDS. CanMEDS 2000: Extract from the CanMEDS 2000 Project Societal Needs Working Group Report. Med Teach. 2000;22(6):549–54. https://doi.org/10.1080/01421590050175505

38. Frank JR, Danoff D. The CanMEDS initiative: implementing an outcomes-based framework of physician competencies. Med Teach. 2007 Sep;29(7):642–7. https://doi.org/10.1080/01421590701746983

39. Jilg S, Möltner A, Berberat P, Fischer MR, Breckwoldt J. How do Supervising Clinicians of a University Hospital and Associated Teaching Hospitals Rate the Relevance of the Key Competencies within the CanMEDS Roles Framework in Respect to Teaching in Clinical Clerkships? GMS Z Med Ausbild. 2015 Aug;32(3):Doc33.

40. Ten Cate O. When I say … entrustability. Med Educ. 2020 Feb;54(2):103–4. https://doi.org/10.1111/medu.14005

41. Schuwirth LW. [Evaluation of students and teachers]. Ned Tijdschr Geneeskd. 2010;154:A1677.

42. Marty AP, Schmelzer S, Thomasin RA, Braun J, Zalunardo MP, Spahn DR, et al. Agreement between trainees and supervisors on first-year entrustable professional activities for anaesthesia training. Br J Anaesth. 2020 Jul;125(1):98–103. https://doi.org/10.1016/j.bja.2020.04.009

The appendix files are available in the PDF version of this manuscript.