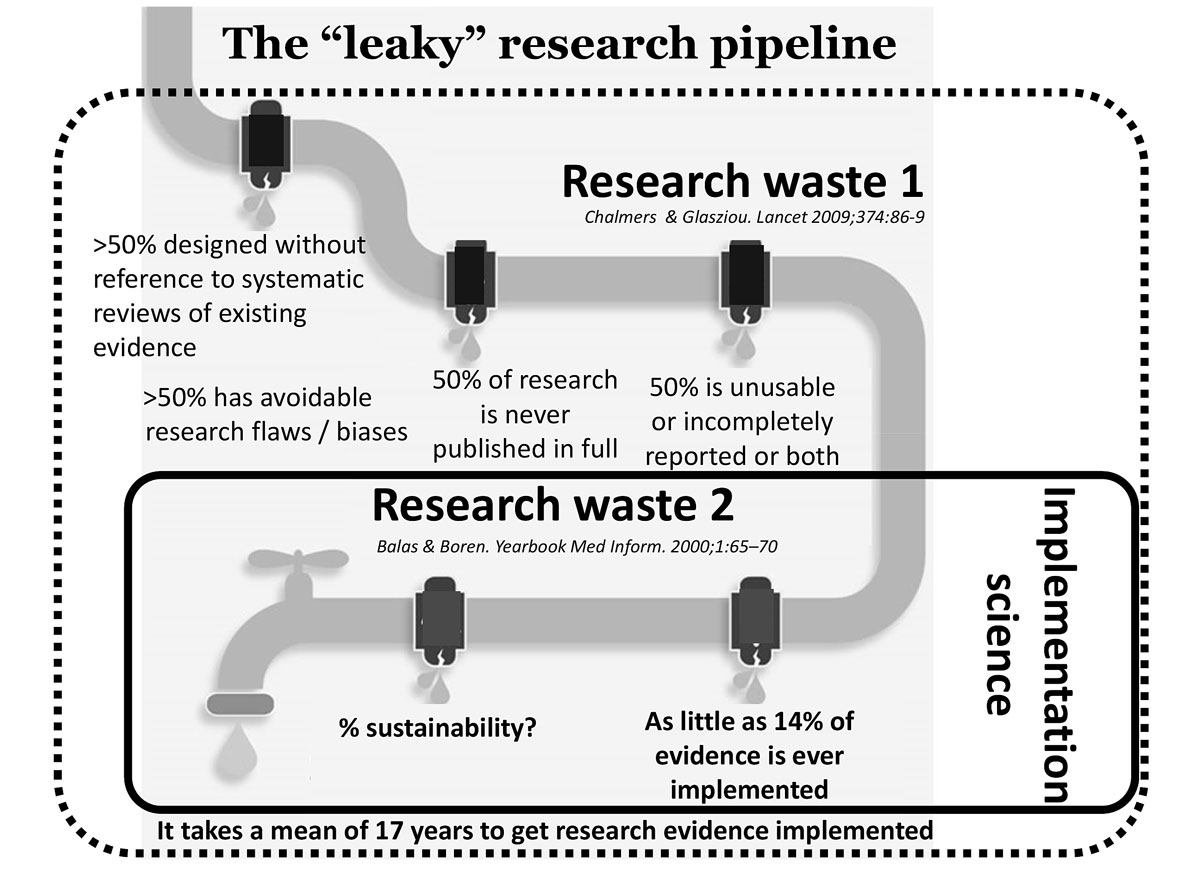

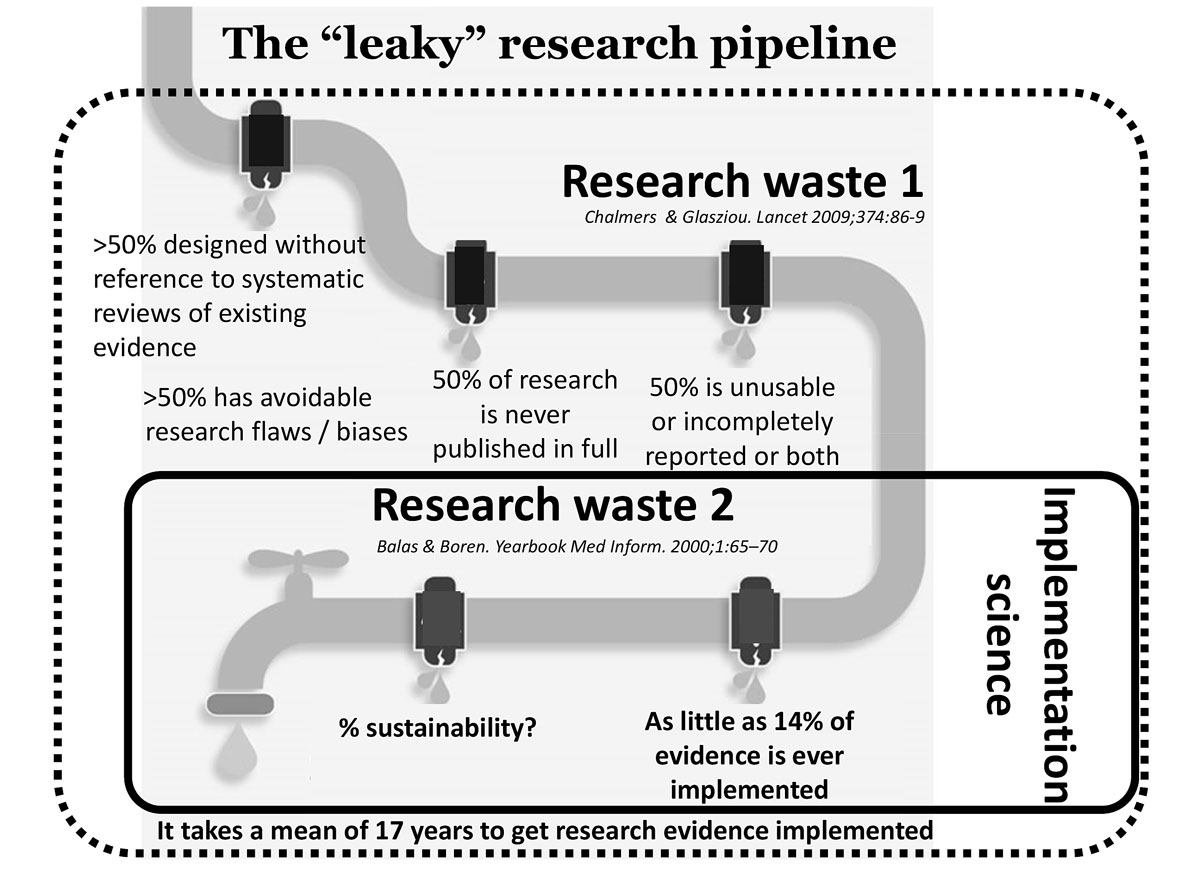

Figure 1 The leaky research pipeline: positioning research waste 1 and research waste 2.

DOI: https://doi.org/10.4414/smw.2020.20323

A 2019 Swiss Academy of Medical Sciences (SAMS) bulletin addressed the challenge of translational research: the enormous rift between basic scientific discoveries and their use in clinical therapies [1]. The report termed this distance “the valley of death”.

The same rift also applies to clinical research and the implementation of related health policies or innovations into routine health services. Achieving the Swiss Federal Council’s health policy strategy 2020–2030 [2] and the United Nations 2030 Agenda for Sustainable Development, comprising the 17 sustainable development goals (SDGs) [3], will mean embracing new approaches and following paths that will speed up the translation and take-up of evidence into real-world settings. Also in 2019, a report on improving patient safety and the quality of care in the Swiss healthcare system called for investment in implementation science as a critical path forward [4].

The valley of death is littered with well-researched, evidence-based programmes, practices, procedures, products and policies developed by health scientists and now desiccating on bookshelves, waiting to be translated into real-world settings. Meanwhile, an estimated 30–40% of patients do not receive treatments of proven efficacy, and 20–25% receive unnecessary or potentially harmful treatments [5].

Balas and Boren showed that as little as 14% of published evidence is translated into clinical practice. The mean wait between innovation and application is 17 years [6]. Implementation deficits contribute to excess research waste.

We differentiate between two types of research waste (fig. 1): “research waste 1” refers to what an influential Lancet paper [7] described as research designed without reference to systematic reviews of the existing evidence, research not published in full, studies with avoidable research flaws and/or studies that are unusable, incompletely reported, or both. This type of waste results in a low proportion of the research initiated eventually resulting in high-quality scientific evidence.


“Research waste 2” refers to the lack of effective and sustainable translation and implementation of evidence-based innovations from the trial world into daily clinical practice. The underperformance of the so-called “research pipeline” (fig. 1) is simply staggering.

Various measures to reduce research waste 1 have been implemented, with some success. Prominent examples include the obligation to register studies, widespread investment in clinical research infrastructures such as clinical trial units, and guidelines supporting the quality of scientific reporting. While these and related measures have helped raise the quality of randomised controlled trials [8], more are needed.

After all, even well-conducted, well-reported studies still need to cross that wasteland between the trial world and real-world settings to guarantee the successful implementation and sustainability of evidence-based innovations. To guide their translation into real-world settings, an increased focus on “implementation science” – a combination of methodological approaches that focus primarily on research waste 2 – should be added early in the research process.

Implementation science is “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services and care” [9]. First and foremost, it is a science, and therefore needs to be differentiated from quality improvement. Conducting an implementation science study implies not only a scientific evaluation of the effectiveness of an intervention in a real-world setting (i.e., pragmatic trials), but also an evaluation of how and why it either works or fails in the specific context in which it was tried.

Evidence generated on effectiveness outcomes and from the evaluation of the implementation pathway, including an assessment of implementation outcomes, can subsequently be transferred to other contexts (whether similar or different) to support a more efficient implementation and/or scaling up of an intervention. Early attention to implementation aspects relating to the research pipeline – optimally proof-of-concept and efficacy trials – has the potential to shorten the time from discovery to implementation.

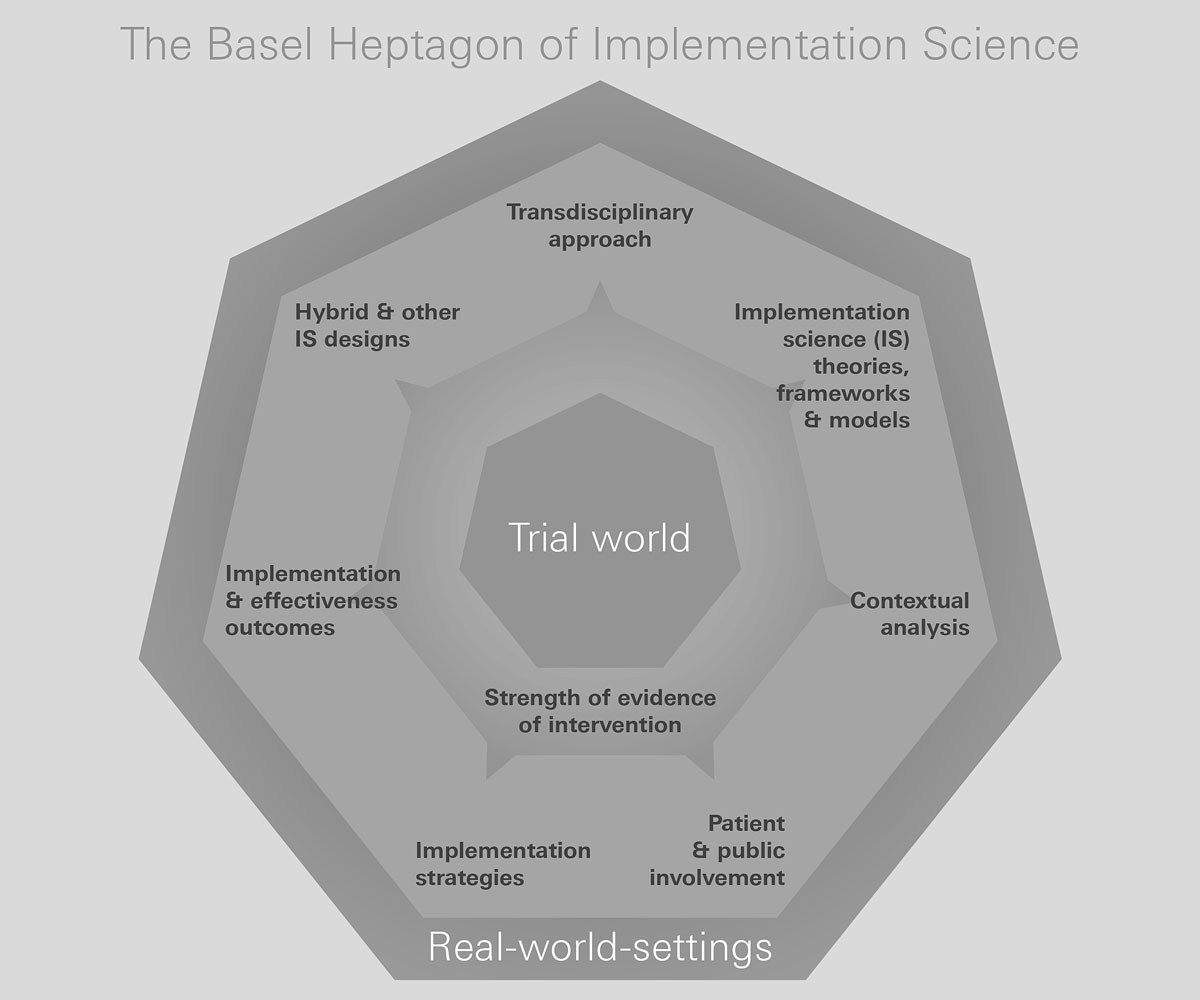

While implementation science builds on existing research principles and methods, its focus is on external validity. Therefore, it is attentive to the additional complexities that characterise real-world contexts. Starting with the strength of the evidence, implementation science requires the integration of seven specific considerations (fig. 2) [10]. (1) “Patient and public involvement” requires involving all relevant stakeholders in all stages of the project. (2) “Contextual analysis” allows researchers to better understand and map the relevant characteristics of the setting in which the intervention will be implemented. Contextual information contributes to effective intervention co-design and informs the choice of contextually relevant implementation strategies. (3) Implementation science-specific “theoretical frameworks” guide either parts or all of an implementation science study. (4) “Implementation strategies” facilitate the adoption, implementation, sustainability and scaling up of specific interventions, programmes or practices. (5) “Effectiveness” (e.g., healthcare utilisation, survival and medication adherence) and “implementation outcomes” (e.g., feasibility, acceptability, reach and implementation cost) are measured concurrently. (6) “Implementation science-specific designs”, such as hybrid designs, combine the evaluation of an intervention’s effectiveness and the outcomes of implementation efforts (with the implementation pathway typically analysed using a mixed-methods approach). (7) As diverse competencies are needed, implementation research is typically conducted by “transdisciplinary research teams”, where the complementary skill sets of implementation scientists are aligned with the knowledge and skills of other team members, including policy- and decision-makers.

Figure 2 Key components of implementation science.

Over the past decade, implementation science has gained traction worldwide as a valuable scientific approach. However, it has not yet been broadly embraced in Swiss health sciences research. While implementation science has been used for some time by Swiss public health researchers for health system-strengthening projects in the south and the east (e.g., within the Swiss Programme for Research on Global Issues and Development), the Swiss National Science Foundation (SNSF) has only recently funded its first implementation science programmes under the umbrella of its National Research Programme 74.

Also, to strengthen and foster recognition of implementation science in Switzerland, the Swiss Implementation Science Network (IMPACT) was recently launched. IMPACT pursues four major aims: (1) to showcase implementation science healthcare projects conducted by Swiss healthcare researchers and institutions; (2) to provide networking opportunities for implementation science researchers and other interested stakeholders in Switzerland; (3) to provide implementation science training opportunities; and (4) to leverage funding options for implementation science in Switzerland. It is hoped that IMPACT will function as a catalyst to advance the effective translation and implementation of evidence-based interventions, programmes and policies, both in Switzerland and beyond. IMPACT is also expected to stimulate approaches to integrating implementation science expertise into the early stages of clinical trials as part of research infrastructures.

In our view, boosting the performance of the Swiss healthcare system requires bridging the “valley of death”, which will involve increasing the research capacity for implementation science. First, implementation science needs to be recognised as an essential part of a high-performing research enterprise, with high societal returns on investment. Second, as implementation science projects require competencies beyond the traditional clinical research methods, researchers need opportunities both to develop these competencies and to learn the principles of implementation science. Third, implementation scientists should be involved early on in the design of clinical research projects: this will potentially not only shorten the time to routine use of evidence-based interventions, but also enhance their sustainability after successful implementation. Fourth, rigorous methods of attracting and developing stakeholder involvement in projects can be fostered through the Swiss EUPATI National Platform, among other initiatives. Fifth, adequate funding mechanisms must be established to help fund implementation science projects. While implementation science already promises to make clinical research far more cost-effective – particularly by shortening the time to routine use of results – the complexities of implementation science studies (e.g., use of contextual analysis, stakeholder involvement, implementation strategies) need to be reflected in the funding mechanisms.

Finally, strategies to apply implementation science methods to Swiss health research offer an excellent return on investment. Designing studies specifically to overcome translation barriers promises to remove years from the current research process. This, in turn, will maximise the entire Swiss research enterprise’s value for patients and populations. In effect, it will bridge the valley of death.

No financial support and no other potential conflict of interest relevant to this article was reported.

1 Scheidegger D. Medizinischer Fortschritt: Warum verläuft die Translation biologischer Erkenntnisse in neue Therapien so schleppend? SAMW Bulletin. 2019;3:1–2.

2 Health2030 – the Federal Council’s health policy strategy for the period 2020–2030. Available from: https://www.bag.admin.ch/bag/en/home/strategie-und-politik/gesundheit-2030/gesundheitspolitische-strategie-2030.html [cited 2020 May 12].

3 United Nations. About the sustainable development goals. Available from: https://www.un.org/sustainabledevelopment/sustainable-development-goals/ [cited 2020 May 12].

4 Vincent C, Staines A. Enhancing the Quality and Safety of Swiss Healthcare. Bern: Federal Office of Public Health. 2019.

5 McGlynn EA, Asch SM, Adams J, Keesey J, Hicks J, DeCristofaro A, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348(26):2635–45. doi: https://doi.org/10.1056/NEJMsa022615

6 Balas EA, Boren SA. Managing Clinical Knowledge for Health Care Improvement. Yearb Med Inform. 2000;09(1):65–70. doi: https://doi.org/10.1055/s-0038-1637943

7 Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009;374(9683):86–9. doi: https://doi.org/10.1016/S0140-6736(09)60329-9

8 von Niederhäusern B, Magnin A, Pauli-Magnus C. The impact of clinical trial units on the value of clinical research in Switzerland. Swiss Med Wkly. 2018;148:w14615. doi: https://doi.org/10.4414/smw.2018.14615

9 Eccles MP, Mittman BS. Welcome to Implementation Science. Implement Sci. 2006;1(1):1. doi: https://doi.org/10.1186/1748-5908-1-1

10 Neta G, Brownson RC, Chambers DA. Opportunities for epidemiologists in implementation science: A primer. Am J Epidemiol. 2018;187(5):899–910. doi: https://doi.org/10.1093/aje/kwx323
