Diagnostic errors and flaws in clinical reasoning: mechanisms and prevention in practice

DOI: https://doi.org/10.4414/smw.2012.13706

Mathieu Nendaz, Arnaud Perrier

Summary

Diagnostic errors account for more than 8% of adverse events in medicine and up to 30% of malpractice claims. Mechanisms of errors may be related to the working environment, but cognitive issues are involved in about 75% of cases, either alone or in association with system failures. Most cognitive errors are not related to knowledge deficiency but to flaws in data collection, data integration, and data verification that may lead to premature diagnostic closure. This paper reviews aspects of the cognitive psychology literature that help us understand reasoning processes and knowledge organisation, and summarises biases related to clinical reasoning. It also reviews the strategies described to prevent cognitive diagnostic errors. Many approaches propose awareness and reflective practice during daily activities, but improving the quality of training at the pre-graduate, postgraduate, and continuing education levels, using evidence-based education, should also be considered. Several conditions must be fulfilled to improve the understanding, prevention, and correction of diagnostic errors related to clinical reasoning: physicians must be willing to understand their own reasoning and decision processes; training should be provided throughout a clinician’s career; and medical schools, teaching hospitals, and medical societies should become more involved in medical education research to strengthen the evidence on error prevention.

Introduction

A 36-year-old man working in house construction presented to an emergency centre with a 10-day history of fatigue, occipital headaches, neck pain, and fever. Two months earlier, he had injured his left forearm and treated the wound himself. On physical examination, his pulse was 88/min, blood pressure 135/75 mm Hg, and temperature 38.2 °C. There was an erythematous scar on the left forearm. He was alert and had no focal neurological deficits. His neck was stiff and painful, not only on flexion but also on palpation of the spine. Meningitis was suspected and a lumbar puncture was performed, showing: leucocytes 50/field, 94% lymphocytes, normal glucose, protein 0.65 g/l. Viral meningitis was considered the main diagnosis and the patient was admitted to the ward for observation and analgesic therapy. This occurred on a Friday afternoon.

During the following weekend, the neck pain remained intense. The resident on duty took the patient’s history again and learned that the patient had already had neck pain for the past 3 weeks, attributed to his professional activities, with episodes of fever, chills, and occasional paresthesias in both hands. On physical examination, his temperature was 38.5 °C, and the neck was very stiff, with intense local pain on palpation. A cervical infectious process, potentially related to the forearm wound, was suspected and imaging was ordered. An MRI of the cervical spine and bacteriological samples eventually confirmed the diagnosis of cervical S. aureus osteomyelitis with a paracervical abscess.

What happened during the work-up of this case that led the first medical team to consider a wrong diagnostic hypothesis? The diagnosis of spinal osteomyelitis is often difficult and may take several days. Nevertheless, this case illustrates many of the pitfalls and biases that may affect any clinician during the reasoning process. The aim of this paper is to help understand the flaws in clinical reasoning that may lead to diagnostic errors. We also review some of the proposed solutions for avoiding reasoning pitfalls. Since we focus on thinking processes, our definition of error is broad and does not necessarily imply harm to the patient or a malpractice claim.

Frequency and causes of diagnostic errors

Several studies have shown that patients and their physicians consider medical errors, and particularly diagnostic errors, common and potentially harmful. For instance, Blendon et al. [1] surveyed patients and physicians on their perception that they (or a member of their family) had experienced medical errors leading to serious harm or treatment changes. Thirty-five percent of physicians and 42% of patients reported such errors. Diagnostic errors accounted for about 50% of mistakes in such surveys [2, 3], although figures between 20% and 25% were also reported, depending on the medical setting and the materials used to determine the rate of diagnostic errors [2]. Studies of clinical practice based on autopsies, second opinions, or case reviews yielded different diagnostic error rates, depending on the clinical discipline. In perceptual disciplines, such as dermatology, pathology, or radiology, diagnostic errors occurred in 2%–5% of cases [2], while in clinical fields requiring more data gathering and synthesis, up to 15% of patients might suffer from a diagnostic error. Schiff et al. [4] reported that the most common missed or delayed diagnoses were pulmonary embolism (4.5%), drug reactions or overdose (4.5%), lung cancer (3.9%), colorectal cancer (3.3%), acute coronary syndrome (3.1%), breast cancer (3.1%), and stroke (2.6%).

The frequency of adverse events due to diagnostic errors is more difficult to assess. Retrospective studies indicate that more than 8% of adverse events and up to 30% of malpractice claims are related to diagnostic errors [2], but prospective studies are lacking. The definition of diagnostic error adds to the uncertainty, since in some studies an error is not counted as such if it caused no harm.

What are the mechanisms of diagnostic errors? In a study of 100 diagnostic errors in internal medicine identified through “autopsy discrepancies, quality assurance activities, and voluntary reports,” Graber et al. [5] sought to determine the contributions of system-related and cognitive factors. The errors had purely cognitive causes in 28% of cases, purely contextual or system-related causes in 19%, and mixed causes in 46% [5]. Thus, cognitive factors, that is, how doctors think, were involved in almost 75% of cases. This underlines the importance of understanding physicians’ thinking, decision making, and clinical reasoning processes, which are addressed in the following section of this paper.
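The “almost 75%” figure follows from the category shares attributed to Graber et al. [5] in the paragraph above, reading “cognitive factors involved” as “purely cognitive or mixed”; the same reading gives the share of cases with any system involvement. This is only arithmetic on the quoted percentages, not an additional result from the study:

$$
\begin{aligned}
\text{cognitive factors involved} &= \underbrace{28\%}_{\text{cognitive only}} + \underbrace{46\%}_{\text{mixed}} = 74\% \approx 75\%\\
\text{system factors involved} &= \underbrace{19\%}_{\text{system only}} + \underbrace{46\%}_{\text{mixed}} = 65\%\\
\text{cases in none of these three categories} &= 100\% - (28\% + 19\% + 46\%) = 7\%
\end{aligned}
$$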

Mechanisms of cognitive errors

Reasoning process

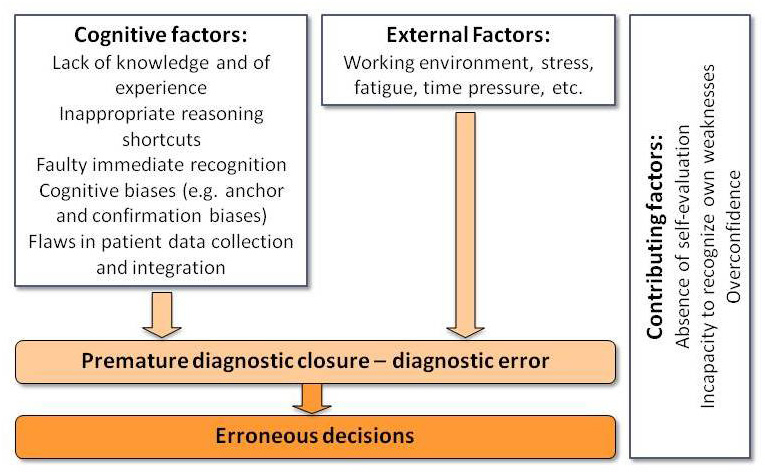

A comprehensive review of knowledge on clinical reasoning is beyond the scope of this article; here we summarise some main aspects that are well supported by several decades of research in cognitive psychology. For a more detailed discussion, we refer the reader to previously published reviews [6–10]. When the physician faces a complaint or a constellation of information about a patient in a given context, two main types of clinical reasoning process can take place in his mind, often unconsciously, depending on his familiarity with the problem (fig. 1). If the clinician has already met a similar clinical picture, an automatic, intuitive, non-analytical process takes place, leading to immediate recognition of the clinical constellation and generation of a working diagnostic hypothesis. This process may consist of recognising a group of specific features of a case (pattern recognition) or a similar case previously encountered (instances).

Figure 1

Schematic representation of the dual process of reasoning including immediate recognition of clinical picture (non-analytic process, right boxes) and hypothetico-deductive process (analytic process, left boxes). There is a high level of interaction between both processes in practice.

A similar figure has been published in: Nendaz M. Éducation et qualité de la décision médicale: un lien fondé sur des preuves existe-t-il? La Revue de Médecine Interne 2011;32(7):436–442 [6]. Copyright © 2010, Société nationale française de médecine interne (SNFMI). Published by Elsevier Masson SAS. All rights reserved. Reprinted with permission.

Figure 2

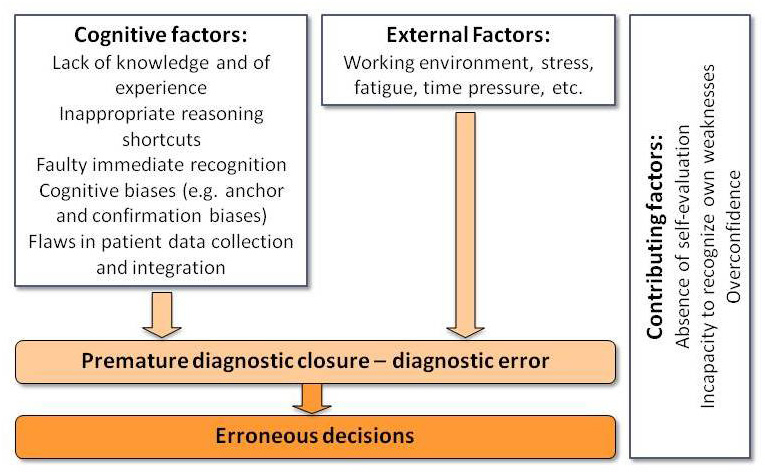

Cumulative conditions leading to diagnostic and decision errors.

When the physician is less familiar with the clinical problem, or if the case is more complex, a more analytical process takes place (hypothetico-deductive process). As soon as the patient presents a complaint, one or more diagnostic hypotheses arise in the physician’s mind, based on the mental representation he has built of the problem. This mental representation can take many forms. It may consist of prototypical diagnoses for similar situations (e.g., chest pain triggers the prototype of myocardial infarction) [11]. It may include knowledge networks containing a blend of various clinical situations experienced before (scripts) [12, 13]. It may result from the transformation of the information provided by the patient into semantically more abstract terms (e.g., “pain, swelling and redness of left knee that appeared at 2 a.m.” becomes, in the mind of the physician, “acute monoarthritis of a large joint”) [14]. The diagnostic hypotheses are used to frame the collection of additional information from the patient. This information is interpreted to judge whether it fits the hypotheses being tested and whether these hypotheses should be maintained, excluded, or tested further by collecting additional information. This process continues until the physician reaches a final working diagnostic hypothesis (fig. 1).

Although both approaches may be described separately, they are not mutually exclusive in practice, since analytical and non-analytical processes interact extensively. In “dual-process theory” [15, 16], the physician goes back and forth between recognising features stored in memory and verifying his hypotheses through a more analytical approach. Using both approaches, rather than either one alone, has been associated with better diagnostic outcomes [17, 18].

Errors related to reasoning biases

Based on this knowledge about clinical reasoning, it is now possible to analyse the potential flaws leading to diagnostic errors. As described above, the immediate recognition process is holistic and may even include the patient’s context (e.g., his profession) in the memorised instance of his medical condition [19]. This immediate and quick process may at times represent a pitfall and lead to error. In the case presented above, the overall picture resembled the many classical instances of viral meningitis the emergency team had already encountered, leading the physicians to consider this diagnosis first. However, although the reasoning process itself may be responsible for an error, the error usually reflects a more complex imbalance between intuition, analysis, values, and emotion in a given context [20].

Another source of reasoning error is the faulty collection of clinical information and the inappropriate weight the physician gives to that information. Several studies showed that the quality of the working diagnostic hypotheses tested during a medical encounter influenced the relevance of the information collected or recognised in a patient case [21]. For example, if the physician thinks of brucellosis, he will ask about animal contacts; if he thinks of pericarditis, he will hear the faint pericardial rub that another colleague would have missed. Moreover, relevant information may be available but ignored by the physician because it does not fit his hypotheses. In the case presented in the introduction, considering a cervical infectious process from the outset would have led the clinician to ask more questions about the cervical pain and the forearm wound, to establish the precise timing of the events, and to examine the patient’s neck more carefully. If given due weight, these findings would probably have widened the differential diagnosis. Most cognitive errors are not related to lack of knowledge (3%) but to flaws in data collection (14%), data integration (50%), and data verification (33%) [5, 22]. This pattern has been found in different fields, such as internal medicine [5], anaesthesiology, and neurology. The number of cognitive errors during a reasoning process may even predict the occurrence of events harming the patient [23].
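The statement that most cognitive errors do not stem from lack of knowledge can be read directly off the four shares quoted in the paragraph above [5, 22], assuming, as their sum of 100% suggests, that each cognitive error is assigned to exactly one category:

$$
\begin{aligned}
3\% + 14\% + 50\% + 33\% &= 100\%\\
\underbrace{14\%}_{\text{collection}} + \underbrace{50\%}_{\text{integration}} + \underbrace{33\%}_{\text{verification}} &= 97\% \quad \text{(errors not due to lack of knowledge)}
\end{aligned}
$$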

One may thus describe several biases in the medical reasoning process related to hypothesis generation and the collection of clinical information. Some of them stem from the reasoning shortcuts, called heuristics, that physicians use in practice [24]. These are genuine strategies, although mainly unconscious, that allow physicians to make decisions quickly in a busy practice despite uncertainty. Although these shortcuts are useful and necessary, they may sometimes lead to reasoning and decision errors [25–27]. Comprehensive lists of heuristics and biases have been published elsewhere [27, 28], and table 1 summarises those most specifically related to diagnostic reasoning.

Other factors may also modulate clinical reasoning and decision making. For example, decisions about coronary disease or other illnesses may vary depending on physician and patient characteristics. Influential physician factors include age, gender, experience, medical specialty, resistance to stress, and attitude towards risk, among many others. Relevant patient characteristics include age, gender, presence of an addiction, socio-economic status, and ethnicity. Many of these factors may give rise to visceral bias, which occurs when the interaction between physician and patient induces emotions that influence the diagnostic and decision processes. Political and medico-economic considerations, industry pressure, and other external factors may also at times be influential [26].

Using our case to illustrate some heuristics and biases, one could imagine the following fictitious scenario: because the emergency physician who first saw this patient had recently admitted a case of viral meningitis, he considered this diagnosis more likely (availability bias) and did not revise it to account for the spine pain, the previous forearm wound, and the duration of the symptoms (anchoring bias). He considered only the results of the lumbar puncture, as confirming his hypothesis, and ignored the other clinical information (confirmation bias). This prevented him from widening the differential diagnosis (premature closure). Because he had already spent time and effort managing this patient, he was unwilling to consider any other diagnostic option (sunk costs bias), particularly more serious diagnoses, because he was the same age as the patient (visceral bias). Moreover, many other patients in the emergency room were waiting and the chief of the department wanted quick patient triage and orientation (stress and external factors).

In summary, several successive, cumulative factors may lead the physician away from a correct process towards premature diagnostic closure and diagnostic error. If the physician lacks the ability to self-reflect and recognise the presence of an error, faulty decisions will follow (fig. 2). Premature closure is a particularly important step in this sequence leading to diagnostic error. It happens when the physician accepts a diagnosis before it has been fully verified, illustrating the maxim: “When the diagnosis is made, the thinking stops” [28]. Premature closure may result from several biases and plays a central role by preventing physicians from considering other possibilities and remaining open-minded. It is also the target of several strategies aimed at reducing error rates, as described below. In addition to the flaws described above, further factors may be considered, such as personal traits of the physician, overconfidence, inability to recognise one’s own weaknesses through self-evaluation [2], and hierarchical relationships leading to blind obedience to a colleague or an “expert”.

Table 1: Some heuristics and biases related to diagnostic reasoning (inspired by references [26–28]).

| Heuristic or bias | Description |
|---|---|
| Anchoring bias | To be unable to adjust the initial diagnostic hypothesis when further information (e.g., test results) becomes available. |
| Availability bias | To consider a diagnosis more likely because it readily comes to mind. For example, a recent and striking experience with a rare disease may make the physician overestimate the frequency of this disease in the next patient. |
| Confirmation bias | To look only for symptoms or signs that may confirm a diagnostic hypothesis, or to interpret clinical findings only in support of this hypothesis, without looking for, or even while disregarding, disconfirming evidence. |
| Diagnosis momentum bias | To consider a diagnosis definite because a diagnostic label attached to a patient is passed on again and again by everyone caring for this patient (“sticky labels”). |
| Framing effect | To be influenced by the way the problem is framed. For example, a survival rate of 60% may be perceived differently from a mortality rate of 40%. |
| Gambler’s fallacy | To believe that if the same diagnosis occurs in several successive patients, it is less likely to occur in the next one. Thus, the estimated pretest probability that a patient has a particular diagnosis may be influenced by the diagnoses of previous patients. |
| Multiple alternatives bias | When multiple diagnostic options are possible, to simplify the differential diagnosis by reverting to a smaller subset with which the physician is familiar; this may result in inadequate consideration of other possibilities. |
| Outcome bias | To opt for diagnoses associated with better outcomes for the patient rather than those associated with bad outcomes; to value what the physician hopes for more than what the clinical data suggest, which leads to minimising serious diagnoses. |
| Playing the odds bias (or frequency gambling bias) | In equivocal or ambiguous disease presentations, to opt for a benign diagnosis on the assumption that it is more likely than a serious one. This bias runs counter to the “rule out the worst-case scenario” strategy. |
| Posterior probability error | When a particular diagnosis has occurred several times in a patient, to assume that it will again be the case with the same presenting symptoms. For example, to assume that dyspnea is due to heart failure because the patient has already had several episodes of acute heart failure, while it may be due to pulmonary embolism. |
| Representativeness bias | To consider only prototypical manifestations of diseases, thus missing atypical variants. |
| Search satisficing bias | To stop considering other simultaneous diagnoses once a main diagnosis is made, thus missing comorbidities, complications, or additional diagnoses. |
| Sunk costs bias | To have difficulty considering alternatives when the clinician has invested time, effort, and resources in looking for a particular diagnosis. Confirmation bias may be a manifestation of this unwillingness to discard a failing hypothesis. |
| Visceral bias | To favour a diagnosis or discard others because of excessive emotional involvement with the patient. Positive or negative feelings towards a patient, as well as preconceptions about, for example, addiction or risky behaviours, may influence the diagnostic process. |

Acting against diagnostic errors

Action against diagnostic errors may be taken at three levels: a) providing physicians with “debiasing” tools to use during work; b) training clinical reasoning on the basis of the available evidence; c) improving the working environment and systems.

At the workplace, while reasoning and decision making take place

Several authors [29, 30] propose making physicians more aware of situations that increase the risk of error, such as patients inducing a visceral bias, and of their own clinical reasoning processes, including pitfalls and heuristics. According to these strategies, making physicians aware of their thinking processes and biases will help them recognise their own potential reasoning flaws while they are working (“situational awareness”). This metacognitive knowledge could be acquired through explicit training and applied in medical practice through “forcing strategies” aimed at preventing diagnostic errors [31]. This approach has shown encouraging results in some fields [32], but it needs further investigation because few impact studies are available [33]. A similar strategy consists of explicitly considering the future consequences of the diagnosis or of the decisions made (prospective hindsight), particularly if the diagnosis turned out to be wrong. In this approach, aimed at preventing premature diagnostic closure, physicians should systematically ask themselves questions that force them to explore other diagnostic possibilities, for example: Are all the patient’s findings accounted for by my diagnosis? Does my hypothesis explain the patient’s findings? What are the consequences of this diagnosis? What diagnosis should I not miss? What is the differential diagnosis? And if it is not what I think, what else could it be? This approach has proven useful in medical students [34] but should be studied more systematically in physicians’ practice.

To help physicians increase their situational awareness and prospective hindsight, the use of checklists has been advocated to enforce a systematic approach to specific patient problems [35]. These checklists address three domains of optimisation: a) cognitive approach; b) differential diagnosis; c) specific information and pitfalls related to selected diseases. Developing such materials requires considerable effort from multidisciplinary teams [23], which may hinder their wider adoption. However, given the important patient safety issues at stake, further efforts should be made to study the impact of such reasoning supports.

Reflective practice is a more comprehensive approach encompassing several steps [36]: a phase of doubt when facing a problem, trying to understand the nature of the problem, finding solutions, verifying solutions and their consequences, and testing the results of hypotheses. Applied to clinical reasoning, this process encompasses the metacognition described above, so that physicians “explore the problem at hand while simultaneously examining one’s own reasoning. When engaged in reflection for solving a case, physicians tend to more carefully consider case findings, search for alternative diagnoses, and examine their own thinking” [37] (p. 1210). Training physicians to use such an approach leads to better diagnostic ability, particularly with more difficult cases [38]. This approach may also counteract the effects of availability bias in first- and second-year residents [39]. Although its application in a busy working environment may be limited, training in this approach may increase the likelihood that physicians will use it more automatically [40].

Training clinical reasoning

In addition to actions in the working environment, training in clinical reasoning may also help prevent diagnostic errors. Based on the available scientific evidence, several components should be considered to improve the quality of training at the pre-graduate, postgraduate, and continuing education levels. These elements are summarised below as a series of recommendations.

Use teaching methods relying on available evidence

Clinical reasoning seminars that start from a patient complaint and allow learners to sequentially and explicitly evaluate diagnostic hypotheses and request additional information are widely used in many institutions [41]. This structured activity involves several aspects of diagnostic competence, such as the use of a dual process of clinical reasoning combining intuitive and more analytical approaches [17, 18]. A recent study [42] introduced into these seminars some aspects of the reflective approach described previously [38, 39]: in case-based clinical reasoning seminars, an intervention designed to give medical students insight into the cognitive features of their reasoning improved the quality of the differential diagnosis written at the time of case synthesis in the medical chart [42]. Another study, in which diagnostic hypotheses were used to frame the physical examination, also showed positive effects on students’ competence [43].

The way new knowledge is learned may also influence the quality of memorisation. The traditional, sequential acquisition of knowledge about diseases is less efficient than an approach allowing comparison and contrast of these diseases. For example, learning the EKG characteristics of left ventricular hypertrophy, then of myocardial infarction, then of pericarditis is less efficient than a simultaneous acquisition of this knowledge allowing for comparison and contrast of the EKG features of these diseases [18, 44].

It should be stressed, however, that learning generic reasoning processes without medical content is not effective. The reasoning process cannot be separated from the specific content knowledge of each case, a well-known notion called “case specificity”. Thus, teaching activities that focus only on processes provide necessary but insufficient training, with a risk of limited efficacy [45].

Practice in clinical context and provide feedback

Because of diminished patient availability and competition between bedside teaching and economic pressures in clinical centres, there may be a tendency to keep learners away from clinical settings. However, early clinical immersion is necessary for the development of professional competences. Systematic reviews have shown that it improves integration into the medical environment, understanding of the medical profession and the health care system, and the ability to contextualise knowledge [46, 47]. It also increases the relevance of the clinical information collected from the patient and the quality of clinical reasoning [48]. Being in a clinical environment exposes learners to several dimensions related to their personal involvement in patient care, but a condition for increased learning is the presence of supervision and feedback, which requires physicians who are dedicated to education and appropriately trained to provide adequate bedside teaching.

Train the trainers

Many physicians think that because they are good clinicians or researchers they are also good teachers. They often rely on personal opinions or beliefs about medical education issues instead of searching for evidence as they would for clinical activities [49]. There is a need for training programmes that provide clinician educators with skills in medical education [50]. According to a systematic review [50], such programmes are associated with greater satisfaction and self-confidence among teachers, better teaching competencies and behaviours, and a positive impact on learners.

Acting on systems and environment

A detailed review of systems is beyond the scope of this paper. However, the working environment may also influence the quality of physicians’ thinking. The traditional “Swiss cheese” model, in which an error results not from a single event but from a succession of events, also applies to diagnostic errors. For example, the following sequence of factors may contribute to a final diagnostic error or wrong decision: lack of experience of the physician with a particular case, lack of supervision, lack of communication within the health care team, work overload and a busy environment, stress and fatigue, and cognitive biases or inappropriate use of heuristics (fig. 2). Several approaches have been developed to support clinicians. They include diagnostic support systems (DSS), alerts within electronic charts, external verification of patient management with feedback, and direct coaching. Research is still ongoing to determine their feasibility and their impact on the quality of patient care.

Conclusion

After more than three decades of research on clinical reasoning and diagnostic processes, evidence has accumulated about their mechanisms and their flaws. Diagnostic errors are mainly cognitive in nature; they rarely involve knowledge deficiency but are related to the appropriateness of data collection from the patient, as well as to data integration and verification of diagnostic hypotheses. Their risk increases in real-world busy practice under time pressure, when physicians use reasoning shortcuts (heuristics) and are subject to biases. These biases may be related to personal traits (e.g., overconfidence), mental representations of diseases (e.g., anchoring bias), and the environment, and many of them lead to premature diagnostic closure. Ways to prevent these errors, such as physicians’ self-awareness, training in medical education, and external support, have been reported. Although some evidence already exists about the efficacy of some approaches, further research is needed to assess the impact of different interventions aimed at reducing diagnostic errors. Additionally, other conditions must be fulfilled to increase the chance that such interventions bring about change. First, physicians should be interested in their own ways of making diagnoses and decisions, and should adopt an evidence-based attitude towards medical education issues instead of relying merely on their own opinions. Second, teaching hospitals and medical schools, as well as medical professional societies, should support medical education research, train their clinicians as supervisors and teachers, and value clinical supervision and feedback in clinical contexts. Finally, pre-graduate, postgraduate, and continuing education should provide more specific training to help physicians detect and correct their own reasoning flaws.
With all these conditions fulfilled, one may hope to make a real difference to the burden of diagnostic errors and thus increase patient safety.

References

1 Blendon RJ, DesRoches CM, Brodie M, Benson JM, Rosen AB, Schneider E, et al. Views of practicing physicians and the public on medical errors. N Engl J Med. 2002;347(24):1933–40.

2 Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med. 2008;121(5 Suppl):S2–23.

3 Gandhi TK, Kachalia A, Thomas EJ, Puopolo AL, Yoon C, Brennan TA, et al. Missed and delayed diagnoses in the ambulatory setting: a study of closed malpractice claims. Ann Intern Med. 2006;145(7):488–96.

4 Schiff GD, Hasan O, Kim S, Abrams R, Cosby K, Lambert BL, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med. 2009;169(20):1881–7.

5 Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165(13):1493–9.

6 Nendaz M. Medical education and quality of decision-making: Is there an evidence-based relationship? Rev Med Interne. 2011;32(7):436–42.

7 Nendaz M, Charlin B, Leblanc V, Bordage G. Le raisonnement clinique: données issues de la recherche et implications pour l’enseignement. Ped Med. 2005;6:235–54.

8 Nendaz MR, Gut AM, Perrier A, Louis-Simonet M, Blondon-Choa K, Herrmann FR, et al. Beyond clinical experience: features of data collection and interpretation that contribute to diagnostic accuracy. J Gen Intern Med. 2006;21(12):1302–5.

9 Elstein AS. Thinking about diagnostic thinking: a 30-year perspective. Adv Health Sci Educ Theory Pract. 2009;14(Suppl 1):7–18.

10 Norman G. Research in clinical reasoning: past history and current trends. Med Educ. 2005;39(4):418–27.

11 Bordage G. Prototypes and semantic qualifiers: from past to present. Med Educ. 2007;41(12):1117–21.

12 Charlin B, Tardif J, Boshuizen HP. Scripts and medical diagnostic knowledge: theory and applications for clinical reasoning instruction and research. Acad Med. 2000;75(2):182–90.

13 Schmidt HG, Norman GR, Boshuizen HP. A cognitive perspective on medical expertise: theory and implications. Acad Med. 1990;65(10):611–21.

14 Chang RW, Bordage G, Connell KJ. The importance of early problem representation during case presentations. Acad Med. 1998;73(10 Suppl):S109–11.

15 Pelaccia T, Tardif J, Triby E, Charlin B. An analysis of clinical reasoning through a recent and comprehensive approach: the dual-process theory. Med Educ Online. 2011;16.

16 Norman G. Dual processing and diagnostic errors. Adv Health Sci Educ Theory Pract. 2009;14(Suppl 1):37–49.

17 Norman GR, Eva KW. Diagnostic error and clinical reasoning. Med Educ. 2010;44(1):94–100.

18 Ark TK, Brooks LR, Eva KW. The benefits of flexibility: the pedagogical value of instructions to adopt multifaceted diagnostic reasoning strategies. Med Educ. 2007;41(3):281–7.

19 Norman G, Young M, Brooks L. Non-analytical models of clinical reasoning: the role of experience. Med Educ. 2007;41(12):1140–5.

20 Lucchiari C, Pravettoni G. Cognitive balanced model: a conceptual scheme of diagnostic decision making. J Eval Clin Pract. 2011;18(1):82–8.

21 Brooks LR, LeBlanc VR, Norman GR. On the difficulty of noticing obvious features in patient appearance. Psychol Sci. 2000;11(2):112–7.

22 Bordage G. Why did I miss the diagnosis? Some cognitive explanations and educational implications. Acad Med. 1999;74(10 Suppl):S138–43.

23 Zwaan L, Thijs A, Wagner C, van der Wal G, Timmermans DR. Relating faults in diagnostic reasoning with diagnostic errors and patient harm. Acad Med. 2012;87(2):149–56.

24 Kahneman D, Slovic P, Tversky A, eds. Judgment under uncertainty: heuristics and biases. Cambridge, UK: Cambridge University Press 1982.

25 Elstein A, Schwartz A, Nendaz M. Medical decision making. In: Norman G, van der Vleuten C, Newble D, eds. International Handbook of Research in Medical Education. Boston: Kluwer 2002:231–62.

26 Junod A. Décision médicale ou la quête de l’explicite. Genève: Médecine et Hygiène 2007.

27 Gorini A, Pravettoni G. An overview on cognitive aspects implicated in medical decisions. Eur J Intern Med. 2011;22(6):547–53.

28 Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78(8):775–80.

29 Croskerry P. Clinical cognition and diagnostic error: applications of a dual process model of reasoning. Adv Health Sci Educ Theory Pract. 2009;14(Suppl 1):27–35.

30 Graber ML. Educational strategies to reduce diagnostic error: can you teach this stuff? Adv Health Sci Educ Theory Pract. 2009;14(Suppl 1):63–9.

31 Croskerry P. Cognitive forcing strategies in clinical decisionmaking. Ann Emerg Med. 2003;41(1):110–20.

32 Hall KH. Reviewing intuitive decision-making and uncertainty: the implications for medical education. Med Educ. 2002;36(3):216–24.

33 Sherbino J, Dore KL, Siu E, Norman GR. The effectiveness of cognitive forcing strategies to decrease diagnostic error: an exploratory study. Teach Learn Med. 2011;23(1):78–84.

34 Coderre S, Wright B, McLaughlin K. To think is good: querying an initial hypothesis reduces diagnostic error in medical students. Acad Med. 2010;85(7):1125–9.

35 Ely JW, Graber ML, Croskerry P. Checklists to reduce diagnostic errors. Acad Med. 2011;86(3):307–13.

36 Mamede S, Schmidt HG. The structure of reflective practice in medicine. Med Educ. 2004;38(12):1302–8.

37 Mamede S, Schmidt HG, Rikers RM, Penaforte JC, Coelho-Filho JM. Influence of perceived difficulty of cases on physicians’ diagnostic reasoning. Acad Med. 2008;83(12):1210–6.

38 Mamede S, Schmidt HG, Penaforte JC. Effects of reflective practice on the accuracy of medical diagnoses. Med Educ. 2008;42(5):468–75.

39 Mamede S, van Gog T, van den Berge K, Rikers RM, van Saase JL, van Guldener C, et al. Effect of availability bias and reflective reasoning on diagnostic accuracy among internal medicine residents. JAMA. 2010;304(11):1198–203.

40 Aronson L. Twelve tips for teaching reflection at all levels of medical education. Med Teach. 2011;33(3):200–5.

41 Kassirer JP. Teaching clinical medicine by iterative hypothesis testing. Let’s preach what we practice. N Engl J Med. 1983;309(15):921–3.

42 Nendaz MR, Gut AM, Louis-Simonet M, Perrier A, Vu NV. Bringing explicit insight into cognitive psychology features during clinical reasoning seminars: a prospective, controlled study. Educ Health (Abingdon). 2011;24(1):496.

43 Yudkowsky R, Otaki J, Lowenstein T, Riddle J, Nishigori H, Bordage G. A hypothesis-driven physical examination learning and assessment procedure for medical students: initial validity evidence. Med Educ. 2009;43(8):729–40.

44 Hatala RM, Brooks LR, Norman GR. Practice makes perfect: the critical role of mixed practice in the acquisition of ECG interpretation skills. Adv Health Sci Educ Theory Pract. 2003;8(1):17–26.

45 Nendaz MR, Bordage G. Promoting diagnostic problem representation. Med Educ. 2002;36(8):760–6.

46 Dornan T, Littlewood S, Margolis SA, Scherpbier A, Spencer J, Ypinazar V. How can experience in clinical and community settings contribute to early medical education? A BEME systematic review. Med Teach. 2006;28(1):3–18.

47 Littlewood S, Ypinazar V, Margolis SA, Scherpbier A, Spencer J, Dornan T. Early practical experience and the social responsiveness of clinical education: systematic review. BMJ. 2005;331(7513):387–91.

48 Rudaz A, Gut AM, Louis-Simonet M, Perrier A, Vu NV, Nendaz MR. Acquisition of clinical competence: added value of clerkship real-life contextual experience. Med Teach. 2012:e1–e6.

49 Harden RM, Grant J, Buckley G, Hart IR. Best evidence medical education. Adv Health Sci Educ Theory Pract. 2000;5(1):71–90.

50 Steinert Y, Mann K, Centeno A, Dolmans D, Spencer J, Gelula M, et al. A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME Guide No. 8. Med Teach. 2006;28(6):497–526.